The following outlines the success Chaitanya Baddam (Director / Lead IT, John Hancock) and her team had with delivering digital experiences via Salesforce Communities by challenging the routine approaches of data integration. The goal here is to dissect the various data integration capabilities John Hancock used to add value to the end user experience for its consumers and producers, as well as the technicians who delivered it.

To achieve system integration, we find ourselves asking the same two questions: (1) “Hey, do you have an API for that?” and/or (2) “Hey, can we move the data over here?” And while those are still very helpful and critical questions, the landscape has evolved beyond just tapping into APIs or moving data. We will now dive deeper into seeing how, and why, focusing on actual integration utilities is a modern and more effective approach.

Background on the initiative

At John Hancock, our mission is to make decisions easier and lives better for all our customers. In this digital age, consumers expect simple, seamless experiences, with all their information at their fingertips. While the insurance industry has been slow to adopt modern, customer-first technology, there is a real opportunity for insurers to play a bigger role in customers’ lives by providing more personalized digital solutions.

Consumer portal:

When our team received the project to enhance the self-service capabilities on our customer portal, we began in-depth research and built a survey. Before investing in technology, we asked ourselves whether people would be willing to go to a web portal to view their payments or change their address. Our findings showed that the end users were very technology-savvy and that there was indeed a desire for an enhanced web portal.

Thus, we built a customer web portal using Salesforce Communities and launched the website in April 2019. Prior to this, customers had to use the legacy website or contact the call center to process their requests. We are serving 200% more customers as of April 2020 and expect that number to increase. With this portal, customers who own John Hancock policies can log in to a secure website; change their address or other personal information; submit inquiries online; view their policy, beneficiary, rider and payment information; and view their interactions with John Hancock support over the years.

Producer portal:

Not all customers maintain their own policies. In those cases, an authorized third-party producer, broker/agent or firm support resource helps the customer. Those roles also perform separate functions to maintain customer relationships and provide advice. In parallel, as part of a risk mitigation and modernization effort, John Hancock was planning to replace older web assets in its portfolio. To satisfy both needs, we built another Salesforce Community, called Producer Portal, and launched it in July 2019. Prior to the Producer Portal, a broker/agent or firm support resource used a legacy website to view all the policies they maintained, update beneficiary information and manage funds and payments online. The Producer Portal provides new features along with the legacy ones, all while reducing overhead and risk.

John Hancock’s employees:

To aid and enrich these portal experiences, John Hancock leveraged Salesforce Service Cloud to help customer service and call center teams. We used the power of the Salesforce platform to develop all of these experiences geared toward consumers, producers and our internal customer-facing teams, while reaping the benefits of ease of build, increased security and new features added three times per year.

A visual of the end result:

Below is an example of a consumer’s view of the portal when they log in. With some information redacted, you see that a logged-in end user can see their detailed policy information and all that’s associated with it. This is important because, while it feels native to the consumer, the vast majority of this information is being fetched in real time from other systems. This visual therefore serves as context for the integration capabilities we’ll describe.

Using external objects to deliver experiences faster

The goal:

How do we get the information from our insurance policy administration systems into the hands of our user base, as well as our consumers and producers, in a digital experience? We already had some of the data in Salesforce since we were using it for call center purposes, where we had the basic information needed to assist customers. But we needed to expand; we used over a dozen different policy systems, which are the ultimate source of truth for detailed information. The goal was to expose information in a way that’s as seamless as possible to consumers and producers.

How we arrived at Salesforce Connect:

Traditionally, data would be updated and imported through overnight data loads via ETL tools; that way, Salesforce is up to date and current when the customer accesses it the next day. This approach requires the data to be copied into Salesforce, and it can quickly become stale. Knowing that this wouldn’t suffice, we looked into Salesforce Connect. It’s a tool that creates “External Objects” within Salesforce and maps those external objects to data tables in external systems. Thus, you avoid copying data into Salesforce and you’re still able to access real-time data on demand.

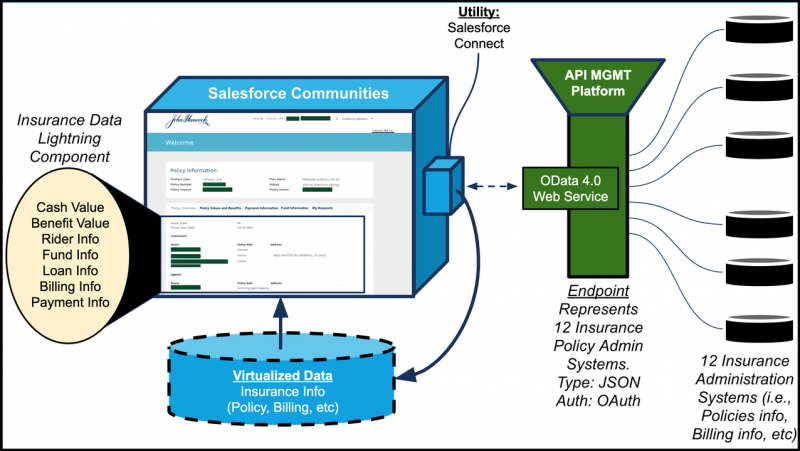

From a development perspective, an alternative would have been to fetch data from multiple admin systems by writing web services. Web services need custom code to parse the data and display it in the user interface for these portals. Salesforce Connect, on the other hand, does not require much custom code to connect to external databases, making it a scalable and easily maintainable approach. The diagram above shows what we ended up implementing for our consumer and producer portals.

The result:

We were able to access real-time data from multiple sources seamlessly! The insurance policy admin systems you see on the right-hand side of the image above are represented as “Virtualized Data” on the left. All you need to do is create digital experiences using the Salesforce platform by accessing that external data as if it were part of the native data model. For a rich front end (the part that displays insurance information on the left of the diagram), we implemented Lightning Component front-end code, which calls an Apex controller for business logic, which in turn runs a SOQL query against the external data. The value here is that you deploy it once and can reuse it everywhere, rather than writing a pile of point-to-point REST code that bloats your codebase.
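To make the contrast between copying and virtualizing data concrete, here is a minimal sketch in Python. It is an illustration of the idea only, not Apex and not the Salesforce Connect API. The `ExternalObject` class, the `fetch_policies` adapter and the in-memory `admin_system` table are all hypothetical stand-ins. The `Policy__x` name simply borrows the `__x` suffix that external objects carry.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, str]


@dataclass
class ExternalObject:
    """Stands in for an external object: it stores no rows, only a way to fetch them."""
    name: str
    fetch: Callable[[str], List[Row]]  # hypothetical adapter into a policy admin system

    def query(self, owner_id: str) -> List[Row]:
        # Every query goes to the source system, so the result is never a stale copy.
        return self.fetch(owner_id)


# Hypothetical policy admin system, modelled as an in-memory table.
admin_system: List[Row] = [
    {"policy": "P-100", "owner": "C-1", "status": "Active"},
    {"policy": "P-200", "owner": "C-2", "status": "Active"},
]


def fetch_policies(owner_id: str) -> List[Row]:
    return [dict(row) for row in admin_system if row["owner"] == owner_id]


policy_x = ExternalObject(name="Policy__x", fetch=fetch_policies)

# An overnight ETL copy is a snapshot taken at load time.
etl_copy = fetch_policies("C-1")

# The source system changes after the load.
admin_system[0]["status"] = "Lapsed"

# The virtualized read sees the change; the copied snapshot does not.
live = policy_x.query("C-1")
```

In this toy setup, `live` reflects the updated status while `etl_copy` still holds the value from load time, which is the staleness problem the overnight approach runs into.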

Taking advantage of Platform Cache

The goal:

As outlined above, we solved real-time access in a scalable way – but there is a caveat. Whenever the portal user switches to a different tab, a request is sent to the external system to fetch the data. This increases the number of round-trip calls to these external systems and thus increases the wait time for the user.

How we arrived at Platform Cache:

We assumed that insurance policy information does not change within a matter of minutes, so the data fetched during a user’s session would likely not change. To enhance the user experience, we used the caching mechanism provided by Salesforce, called Platform Cache.

The result:

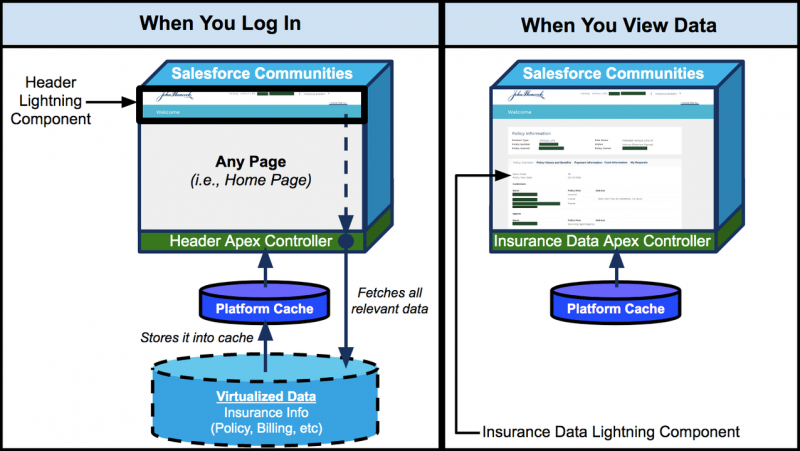

Upon implementing Platform Cache, the number of round-trip calls to external databases was reduced by 60% per user. The end user has a seamless experience without many hops between the systems on the backend. The diagram above depicts how we solved for this.

The diagram shows two parts; the first, on the left, is when the end user logs in. Upon login, the user hits our page header in Salesforce Communities, which is a Lightning Component. As it should, this header resides on every portal page, but it isn’t just a header – it’s also a conduit to the caching service. In the background, the header Lightning Component calls the Apex controller, which then takes all the virtualized data in External Objects (powered by Salesforce Connect) and stores it in Platform Cache. All the while, the user’s experience is unaffected.

The beauty of this comes when you look at the right-hand side of the diagram. Now the end user is doing various things on the portal and viewing their insurance data, but no hops or round trips happen on the backend. Keep in mind that this, in and of itself, also shows the value of the Salesforce Platform. Normally, you’d have to use a caching service such as Redis, but Salesforce has a cache built right into the Platform – no wiring needed. It takes little code to invoke it and have it work with virtualized data from external objects; in our case, that code lives in the Apex controller behind the Lightning Component found in the header.
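As a rough illustration of the flow described above, here is a Python sketch of priming a cache when the header loads and serving later tab views from it. This is not the Apex controller itself and does not use the Platform Cache API. `SessionCache`, `on_header_load`, `view_tab` and the 300-second time-to-live are assumptions made for the example.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class SessionCache:
    """A tiny time-to-live cache, standing in for a session-scoped platform cache."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def put(self, key: str, value: Any) -> None:
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None or entry[0] <= self._clock():
            self._entries.pop(key, None)  # drop missing or expired entries
            return None
        return entry[1]


round_trips = 0  # counts calls that leave the platform


def fetch_policy_from_external_system(user_id: str) -> dict:
    """Hypothetical stand-in for a query against an external object."""
    global round_trips
    round_trips += 1
    return {"user": user_id, "policy": "P-100"}


def view_tab(cache: SessionCache, user_id: str) -> dict:
    """Serve from the cache when possible; fall back to the external system."""
    cached = cache.get(user_id)
    if cached is None:
        cached = fetch_policy_from_external_system(user_id)
        cache.put(user_id, cached)
    return cached


def on_header_load(cache: SessionCache, user_id: str) -> None:
    """Called by the page header on login: primes the cache in the background."""
    view_tab(cache, user_id)


cache = SessionCache(ttl_seconds=300)
on_header_load(cache, "C-1")
for _ in range(5):  # the user switches tabs five times
    view_tab(cache, "C-1")
```

In this toy run only the header load reaches the external system; the five tab views are served from the cache. The time-to-live encodes the assumption that policy data rarely changes within a session, so choosing it is a business decision more than a technical one.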

Utilizing Platform Events to achieve greater flexibility

The goal:

Customer experience is the crux of everything we do. A customer should not have to wait for a job or event to complete, especially without knowing how long it will take. When a customer or producer logs into the portal, we run a complex set of checks to see whether the user indeed has access to view the policies, based on certain rules. Sometimes these rules take a while to run, depending on the number of policies the agent is servicing.

How we arrived at Platform Events:

We used Salesforce Platform Events to notify a user once a job is complete. Instead of having the user wait while the checks are running, we create a Platform Event, which triggers an email informing the user that the calculation is complete and shows them the relevant information upon login. The result looks like this:

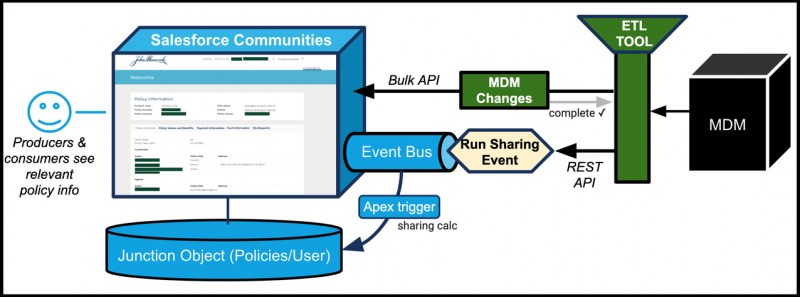
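The login flow above can be sketched in a few lines of Python. This is a simplified model rather than our implementation: the in-memory `event_queue` stands in for the event bus, `outbox` stands in for outgoing email, and the event type `AccessCheckRequested` and the `serviced` lookup are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List

event_queue: Deque[Dict[str, str]] = deque()  # stands in for the event bus
outbox: List[str] = []                        # stands in for outgoing email
access: Dict[str, List[str]] = {}             # computed policy access per user

# Hypothetical book of business: which policies each agent services.
serviced = {"agent-7": ["P-100", "P-200", "P-300"]}


def on_login(user_id: str) -> str:
    """Return right away; the slow access check is queued, not run inline."""
    event_queue.append({"type": "AccessCheckRequested", "user": user_id})
    return "Welcome. We are preparing your policies."


def handle_events() -> None:
    """Subscriber: runs later, outside the login request."""
    while event_queue:
        event = event_queue.popleft()
        user = event["user"]
        # Placeholder for the real, potentially slow, access rules.
        access[user] = list(serviced.get(user, []))
        outbox.append(f"{user}: your policy list is ready ({len(access[user])} policies)")


message = on_login("agent-7")
pending_before = len(event_queue)  # the check has been requested but not yet run
handle_events()
```

The point of the sketch is the ordering: `on_login` returns before any rule runs, and the notification is produced only once the subscriber has finished.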

But why is this integration related? Well, this is the override to the batch-based Master Data Management (MDM) integration that occurs. Here is what happens: Several times per day, we use Platform Events to synchronize information about our consumers and producers through the MDM system. MDM has information that our portals need to figure out what insurance policies the user has access to. We keep the information in sync this way:

The result:

Let’s start off with the diagram directly above, reading it right to left. MDM changes get picked up by an ETL tool, which uses the Bulk API to push all the information about the user and policies into Salesforce. Upon completion, the ETL tool publishes a Platform Event through the REST API. Let’s call it “Run Sharing Event,” as the diagram depicts. Run Sharing Event has an out-of-the-box API URL, to which this notification from the ETL tool is sent. Once the Run Sharing Event is published through an API call, a Salesforce Apex Trigger subscriber fires and calculates the insurance policy access and other information, storing the result in a junction object that ties policy information to the user. Then, any consumer or producer can see all the relevant policy information they are allowed to see.

Let’s say the user doesn’t have the latest information about policy access reflected in the portal. No worries! As the diagram prior to this one shows, that calculation is run when the producer or consumer logs in. That way, we get something in the hands of the end-user faster, while a sync with MDM has yet to happen or is in the middle of settling.
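For readers who want to picture the MDM sync flow described above, here is a hedged Python sketch of its two halves. The first function builds (but does not send) a REST request of the kind used to publish a platform event, which is a POST to the event’s `sobjects` endpoint. The second is a toy version of the subscriber’s sharing calculation. The event name `Run_Sharing_Event__e`, the field `Batch_Id__c`, the instance URL, the API version and the party-matching rule are all assumptions for illustration.

```python
import json
from typing import Dict, List, Set, Tuple
from urllib.request import Request


def build_publish_request(instance_url: str, api_version: str, event_api_name: str,
                          fields: Dict[str, str], access_token: str) -> Request:
    """Build (but do not send) the REST call that publishes one platform event."""
    url = f"{instance_url}/services/data/v{api_version}/sobjects/{event_api_name}/"
    return Request(
        url,
        data=json.dumps(fields).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {access_token}",
                 "Content-Type": "application/json"},
    )


def run_sharing(user_party: Dict[str, str],
                policy_parties: Dict[str, Set[str]]) -> List[Tuple[str, str]]:
    """Subscriber logic: derive (user, policy) junction rows from synced MDM data."""
    rows = []
    for user, party in sorted(user_party.items()):
        for policy, parties in sorted(policy_parties.items()):
            if party in parties:  # hypothetical rule: the user's party is on the policy
                rows.append((user, policy))
    return rows


# Step 1: the ETL tool has finished its bulk load and announces it.
request = build_publish_request(
    "https://example.my.salesforce.com", "58.0", "Run_Sharing_Event__e",
    {"Batch_Id__c": "mdm-batch-001"}, access_token="<token>")

# Step 2: the subscriber reacts by recomputing who may see which policy.
junction = run_sharing(
    {"consumer-1": "party-A", "producer-9": "party-B"},
    {"P-100": {"party-A"}, "P-200": {"party-A", "party-B"}},
)
```

The design choice worth noting is that the ETL tool only announces completion. It does not need to know how sharing is calculated, so the rules can change without touching the integration.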

Benefits of Platform Events:

The Platform Event infrastructure helps tremendously here. First, consider the volume. Whether it’s end users logging into the portal or ETL jobs completing, the decoupled processing of Platform Events lets the platform take in this load without making end users wait. It also removes the burden of keeping systems in lockstep to validate the entire operation. This decoupling makes these operations asynchronous, and everything keeps running smoothly.

In addition, Platform Events don’t have to stem from data manipulation (DML); as we saw in our earlier example, we kick the event off when someone logs in rather than waiting for a data update to occur. This is demonstrated in the diagram above. We can hang processes and capabilities on Platform Events and replay them if a connection is broken, which is often impossible, or at best cumbersome, to do otherwise.

While these things are subtle to think about, they equip you for handling things at scale related to performance and productivity of the technical team. Here is another example of how we think about utilizing Platform Events whenever possible.

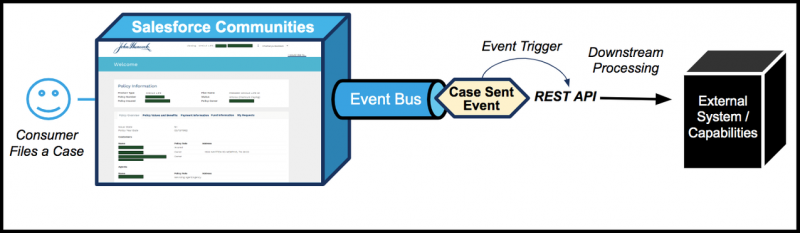

As shown in the diagram, when a consumer files a case on the portal, we publish a Platform Event immediately. From this “Case Sent” Platform Event that represents a case filing, an Apex trigger fires an API call to the downstream systems, which then act upon the case. Traditionally, we had jobs running at certain intervals to pick up new cases and send them to downstream systems. However, with Platform Events, we’ve been able to step up our game when it comes to customer service. A Platform Event allows us to take the burden off the system by letting only the relevant critical parts be completed synchronously, while the rest of the work hangs off the Platform Event to be completed asynchronously. There is even a native capability that allows you to choose whether a Platform Event gets published after a transaction is committed or as soon as the publish call executes (see the How-To here).
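The difference between the two publish behaviors mentioned above is easy to miss, so here is a small Python model of it. This is a sketch of the semantics only. The `Transaction` class and its `after_commit` flag are hypothetical and are not how the platform exposes the setting.

```python
from typing import Callable, Dict, List

Event = Dict[str, str]


class Transaction:
    """Models a unit of work that may publish events before it commits."""

    def __init__(self, deliver: Callable[[Event], None]):
        self._deliver = deliver
        self._pending: List[Event] = []

    def publish(self, event: Event, after_commit: bool = True) -> None:
        if after_commit:
            self._pending.append(event)  # held until the transaction succeeds
        else:
            self._deliver(event)         # sent as soon as the publish call runs

    def commit(self) -> None:
        pending, self._pending = self._pending, []
        for event in pending:
            self._deliver(event)

    def rollback(self) -> None:
        self._pending.clear()            # held events are never sent


delivered: List[Event] = []

# A failed case filing: the held event is dropped, the immediate one still goes out.
failed = Transaction(delivered.append)
failed.publish({"type": "Case Sent", "case": "C-1"})
failed.publish({"type": "Audit", "case": "C-1"}, after_commit=False)
failed.rollback()

# A successful case filing: the held event is delivered on commit.
ok = Transaction(delivered.append)
ok.publish({"type": "Case Sent", "case": "C-2"})
ok.commit()
```

In this model, holding an event until commit suits notifications that must not describe work that was rolled back, while immediate publishing suits things like logging, where you want the event even if the transaction fails.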

Additionally, though we’re not utilizing this capability just yet, one can subscribe to Platform Events using long polling. This is valuable if many systems were to subscribe here, because there would be less point-to-point REST API work to write. The platform would broadcast the event (for example, “Case Sent”) and a number of systems would subscribe to it and pick it up. This achieves the publish-subscribe pattern. Using long polling would also allow events to be replayed if a connection breaks, which is very valuable. Again, this goes back to making integrations scalable. It’s not about “Hey, do you have an API for that?” but about thinking two steps ahead to let the user achieve the best digital experience possible.
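To show why replay matters in a publish-subscribe setup, here is a minimal Python sketch. It assumes a simple in-memory log with sequential replay IDs; on a real event bus a subscriber should treat replay IDs as opaque positions rather than rely on them being contiguous. `EventLog`, `Subscriber` and the system names are hypothetical.

```python
from typing import List, Tuple


class EventLog:
    """An append-only log; each event gets a replay ID (1, 2, 3, ... in this sketch)."""

    def __init__(self) -> None:
        self._events: List[str] = []

    def publish(self, payload: str) -> int:
        self._events.append(payload)
        return len(self._events)

    def read_after(self, replay_id: int) -> List[Tuple[int, str]]:
        """Return every event published after the given replay ID."""
        return [(i + 1, p) for i, p in enumerate(self._events) if i + 1 > replay_id]


class Subscriber:
    def __init__(self, name: str) -> None:
        self.name = name
        self.last_replay_id = 0  # position of the last event this system handled
        self.seen: List[str] = []

    def poll(self, log: EventLog) -> None:
        for replay_id, payload in log.read_after(self.last_replay_id):
            self.seen.append(payload)
            self.last_replay_id = replay_id


log = EventLog()
billing = Subscriber("billing")
claims = Subscriber("claims")

log.publish("case-1")
billing.poll(log)
claims.poll(log)

# The claims system loses its connection; two more cases are filed meanwhile.
log.publish("case-2")
billing.poll(log)
log.publish("case-3")
billing.poll(log)

# On reconnect, claims resumes from its last replay ID and catches up.
claims.poll(log)
```

Neither the publisher nor the other subscriber had to do anything special for the disconnected system to catch up, which is what keeps the number of point-to-point integrations from growing with each new consumer.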

The technology utilized by Platform Events is Kafka, which is embedded in the platform and allows the user to achieve these types of use cases. Technologies are abstracted so the user can continue to reap the benefits of what Salesforce provides without needing to maintain the infrastructure. Today Kafka and Redis are just examples of under-the-hood technologies, but tomorrow it can be something else – the takeaway is that infrastructure is seamless and unnoticeable to the consumer who is developing on the platform. They can take advantage of these capabilities and truly focus on their end goals.

Concluding thoughts

How do we keep up with all this good stuff?

It’s easy to miss these powerful new tools and features if you don’t have an established framework to keep up with them and anticipate them ahead of time. We at John Hancock take this seriously, and thus have a formal approach to keeping up with new features, which allowed us to be proactive and take advantage of the tools described here to accomplish our goals in a better, faster way.

First, we have a team of half a dozen folks from various divisions that digests these new features, kind of like a committee. They have a review meeting one week after the preview release notes go out, which occurs long before the actual release date. Then, the group schedules two hour-long meetings, one with various business counterparts and another with technology counterparts. That way, they enable the business to take advantage of new application features and enable technology to take advantage of features that can help build capabilities. We also have an internal social communication channel – made up mostly of Salesforce practitioners at John Hancock – to help crowdsource the good things people see in preview release notes and upcoming release capabilities. These examples are discussed during the two meetings, and anything deemed worthy of being put into play then gets turned into user stories.

And how do we think about these integration utilities?

Clearly, the landscape of data integration utilities has grown a lot and is only continuing to grow. One integration utility can’t solve every use case, nor is that ever the expectation. However, having a map of where each utility is most applicable, specific to your scenarios, can be quite helpful.

This is where Salesforce’s Trailhead program comes in. Trailhead allows you to learn these utilities and work with them hands-on. Here are some other utilities worth learning about:

External Services, Change Data Capture, and MuleSoft provide different angles on looking at integration while Big Objects provides insight on how to consider taking in some of the larger data and having it live natively in Salesforce. Composite Resources provides an interesting take on how to utilize APIs in a more efficient and effective way.

About the Authors:

Chaitanya Baddam – Director / Lead IT, John Hancock

Arastun Efendiyev – Principal Technical Architect, Salesforce