Do you know how your customizations will perform when the crowds show up? Confidence is hard-earned. We’re confident in our platform’s ability to scale and perform because we incorporate testing into our culture.

Develop a performance testing practice so you can be assured your solution will scale to meet your user needs without unwanted side effects.

This article is part two in a series on performance testing. The first article provides a general introduction. In this article, we’ll focus on the nuances of performance testing solutions built on the Salesforce Lightning Platform.

Tooling recommendations

The most common questions about performance testing are around selecting tools. The right answer for you depends on a host of factors, such as use cases, budget, available talent, time, and licensing. There are a few generalizations you can make to simplify things.

Performance testing tools tend to have a focus area. Some specialize in simulating user interface (UI) navigation, while others focus on sending messages over specific protocols, such as HTTP requests over TCP. In general, you can divide Salesforce test cases into two groups: UI tests and API tests. Consider adopting a specific tool for each group.

Salesforce Lightning provides a robust client-side experience. It does this in part by fully utilizing the web browser’s capability. Because of this, we recommend that you use tools that provide a full browser, DOM and CSS renderer for UI tests. Examples of this are LoadRunner, Selenium, and NeoLoad.

Testing APIs

Salesforce provides multiple platform APIs such as the REST, SOAP, and Bulk APIs. Additionally, developers can create REST and SOAP APIs with custom Apex code. Here are a few best practices for API-related tests.

Picking tools

Use tools that support HTTPS over TCP for API testing. A good fit will let you work with all aspects of the message exchange, such as response codes, headers, and the payload.

Don’t log in on every API request

API requests must authenticate just like users do when accessing Salesforce. An easy mistake is to include the login flow as part of the test request. Don’t do that. The test measurements will be thrown off and the login security rules might lock the test user account for making too many login attempts.

There are multiple authentication strategies that your Salesforce organization can use instead. Because of this, there may be some nuance to how you implement authorizing your API calls in the tests. In general, tests should leverage an OAuth flow that logs in with credentials and receives an access token. For more details on how to configure this, see the Salesforce documentation on authorization through connected apps and OAuth 2.0.

The key point is that before the actual tests, the automation should negotiate logging in and fetching an access token. The tests should then use the access token in all additional API requests.
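As a rough illustration, here is a minimal Python sketch of that pattern. It assumes the OAuth 2.0 username-password flow against the standard token endpoint; your org may require a different flow or host. The `fetch_token` and `TokenSession` names are hypothetical, not part of any Salesforce library.

```python
import json
import urllib.parse
import urllib.request

# Assumed token endpoint; sandboxes and My Domain orgs use a different host.
TOKEN_URL = "https://login.salesforce.com/services/oauth2/token"


def fetch_token(client_id, client_secret, username, password, token_url=TOKEN_URL):
    """Log in once and return the parsed token response as a dict."""
    form = urllib.parse.urlencode({
        "grant_type": "password",
        "client_id": client_id,
        "client_secret": client_secret,
        "username": username,
        "password": password,
    }).encode("utf-8")
    request = urllib.request.Request(token_url, data=form)  # data makes this a POST
    with urllib.request.urlopen(request) as response:
        return json.load(response)


class TokenSession:
    """Caches the access token so timed requests never repeat the login."""

    def __init__(self, login):
        self._login = login      # zero-argument callable returning a token response
        self._token = None

    def headers(self):
        if self._token is None:  # only the first call triggers a login
            self._token = self._login()["access_token"]
        return {"Authorization": f"Bearer {self._token}"}
```

In a test run, you would build one `TokenSession` during setup, outside the timed portion, and attach `session.headers()` to every measured request.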

Make your tests configuration driven

To drive the tests, many things are required: URLs, URIs, and authentication information, for example. Rather than hard code these data points, expose them as configuration options. The details of where and what you’re testing may change frequently. It’s easier to update a single configuration file than hunt through all the tests to find where values are used.
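One way to do this, sketched in Python, is to load every environment-specific value from a single JSON file. The `LoadTestConfig` class and its field names are illustrative assumptions; pick whatever keys your tests actually need.

```python
import json
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class LoadTestConfig:
    instance_url: str   # base URL of the org under test
    api_path: str       # URI of the endpoint being measured
    client_id: str      # connected app consumer key
    username: str       # dedicated test user


def load_config(text):
    """Parse a JSON document into a LoadTestConfig, failing fast on missing keys."""
    raw = json.loads(text)
    # Unknown keys are ignored; a missing key raises KeyError before any test runs.
    return LoadTestConfig(**{f.name: raw[f.name] for f in fields(LoadTestConfig)})
```

Because the config object is frozen, a test cannot accidentally change a value halfway through a run.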

Reuse your data

Performance tests often require data to be submitted as part of the test. It’s tempting to randomly generate data on the fly, but there are drawbacks to that approach. To accurately draw conclusions about how the system performs, you want to minimize the things that are changing between test cycles. By using the same data set for all test runs, you can establish an apples-to-apples comparison.
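If you do generate data, generate it once and keep it. The Python sketch below builds a data set from a fixed seed, so the same rows come back on every run, and serializes it so it can be stored alongside the tests. The function names and the choice of `Name` and `AnnualRevenue` columns are assumptions for illustration.

```python
import csv
import io
import random


def build_accounts(count, seed=42):
    """Generate the same synthetic rows every time for a given seed."""
    rng = random.Random(seed)  # private generator, unaffected by global random state
    return [
        {"Name": f"Perf Account {i:05d}", "AnnualRevenue": rng.randrange(10_000, 1_000_000)}
        for i in range(count)
    ]


def to_csv(rows):
    """Serialize rows so the data set can be saved and reused across test cycles."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["Name", "AnnualRevenue"])
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()
```

Saving the CSV output and loading it in later cycles is the safer option, since it does not depend on the generator behaving identically across Python versions.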

Use a realistic org shape

A Salesforce org can be referred to as having a “shape”. This refers to the type and amount of data in the org, the sharing rules, role hierarchies, territories, automations, and customizations. It’s what makes your org different from all the others.

When conducting performance testing, use an org shape that is as close as possible to production. Use data volumes that are the same order of magnitude as the production org.
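As a quick sanity check, the "same order of magnitude" guideline can be read as "the record counts differ by less than a factor of ten." The Python helper below encodes that interpretation; the function name and the exact threshold are our assumptions, not a platform rule.

```python
import math


def same_order_of_magnitude(test_rows, production_rows):
    """True when the two record counts differ by less than a factor of ten."""
    if test_rows <= 0 or production_rows <= 0:
        return False
    return abs(math.log10(test_rows) - math.log10(production_rows)) < 1
```

For example, a test org with 2 million records passes against a production org with 5 million, while a test org with 50,000 records does not.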

Sensible validation

To avoid false positives, use assertions to make sure that the system actually did what the test is expecting. Be careful not to overdo it, though. Having a large number of assertions can slow tests down and offset the actual measurements.

To balance between correctness and speed, we recommend that API tests assert on:

- The HTTP response code.

- The response payload contains the key elements.
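Those two checks can be sketched in Python as a small helper that is cheap enough to run on every response. The `check_response` name and its parameters are hypothetical; the expected status and keys depend on the API you are testing.

```python
import json


def check_response(status, body, expected_status, required_keys):
    """Return a list of problems; an empty list means the response passed."""
    if status != expected_status:
        # The payload of a failed call is not worth parsing.
        return [f"expected HTTP {expected_status}, got {status}"]
    try:
        payload = json.loads(body)
    except ValueError:
        return ["response body is not valid JSON"]
    if not isinstance(payload, dict):
        return ["response body is not a JSON object"]
    missing = [key for key in required_keys if key not in payload]
    return [f"missing keys: {', '.join(missing)}"] if missing else []
```

For a record-creation call, for instance, you might call `check_response(status, body, 201, ("id", "success"))` and record any returned problems as a failed sample.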

Testing user experience considerations

In an ideal world, performance tests mimic real-world behavior. Often that means the tests need to simulate clicking through the UI the same way users do in their web browser. There are a few nuances to keep in mind when testing the UI.

CSS IDs cannot be used

UI testing tools use either XPath or CSS selectors to interact with web page elements. The common practice is to use an element’s ID to find the element. Lightning Web Components have unique IDs generated on every page load, which means this approach isn’t compatible with Salesforce applications. Selectors must be crafted that identify page elements by using criteria other than element ID.
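To keep ID-based selectors from creeping into a test suite, a simple check can flag them before a run. The Python sketch below is deliberately rough: it uses a regular expression rather than a real CSS parser, and the `data-name` attribute in the usage note is a hypothetical example of a stable attribute, not something the platform guarantees.

```python
import re

# Matches "#some-id" and "[id=...]" style selectors (including ^=, *=, and similar).
# Deliberately simple: a "#" inside a quoted attribute value is also flagged.
_ID_SELECTOR = re.compile(r"#[\w-]+|\[\s*id\s*[~|^$*]?=")


def relies_on_id(selector):
    """True when a CSS selector targets an element by its ID."""
    return bool(_ID_SELECTOR.search(selector))


def by_attribute(tag, attribute, value):
    """Build a CSS selector from a stable attribute instead of an ID."""
    return f'{tag}[{attribute}="{value}"]'
```

A selector such as `by_attribute("lightning-input", "data-name", "amount")` passes the check, while `#input-42` is flagged.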

Design test users to mimic actual users

When interacting with Salesforce, automated tests need a user account to authenticate with. The simplest approach is to create one new user that has wide access and reuse it for all tests.

While that is an easy approach, it’s recommended to set up test users that closely mimic the data access rights and system permissions of the users that the tests represent. Enforcing access rules requires effort by the platform. The performance tests should include that effort in scaling evaluations.

Don’t use your credentials in tests

Never use your personal login credentials for tests. Tests can spin out of control and attempt to log in too many times, which can lock your user account. Instead, when testing APIs with a tool like JMeter, use a dedicated integration user account for the tests to authenticate with.

When testing the user interface with a tool like Selenium, do not use the same user account for each testing thread. As tests scale up, they can be distributed across multiple IP addresses. This may be flagged by your org’s security settings and result in the user account being locked. At a minimum, assign a user account per IP address that is responsible for generating traffic.
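A minimal Python sketch of that assignment is shown below. The `assign_users` function is hypothetical; it assumes you already know the IP addresses of your load generators and have provisioned enough test users.

```python
def assign_users(generator_ips, test_users):
    """Map each load generator IP address to its own test user."""
    ips = sorted(set(generator_ips))  # de-duplicate so one IP never gets two users
    if len(test_users) < len(ips):
        raise ValueError(f"need {len(ips)} test users, only {len(test_users)} provided")
    return dict(zip(ips, test_users))
```

Failing before the run starts is intentional: discovering a shortage of test users mid-run usually means a locked account and a wasted test cycle.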

Collecting user interface metrics can be tricky

UI testing tools can be CPU intensive. When generating large amounts of load, the testing tool can slow down the machine that is running the load generation. This can result in measurements becoming skewed.

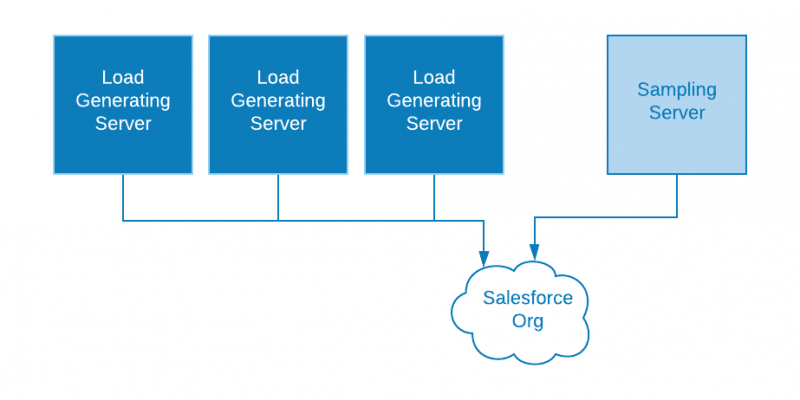

One way to avoid this situation is to divide the test responsibilities into two groups. You can see this in the following diagram. The first group is responsible for adding load on the system, while the second group is responsible for only taking measurements. This approach keeps the sample taking processors light and can result in a more accurate measurement.

Scaling tests

We’ve introduced the key concepts of performance testing and shared guidance on the most common things that arise when applying them to the Salesforce Platform. To wrap up, we want to address a final question: How do you scale up your test?

Over time, your testing strategy will likely need to scale up to generate the load that represents future activity. The following sections provide a few concepts that will help you think about designing test infrastructure.

Distributed testing

The simplest way to scale a piece of software is by migrating it to a larger computer. This is called scaling vertically. Given enough time, your test plans might outgrow what can be managed with a single computer. To address this, just add more computers! This is called scaling horizontally.

Two computers can generate more load than one. Using three computers is even better. There is a catch, however. The additional capability comes at the cost of additional complexity. Growing the test infrastructure moves the test system from being a single application into a distributed system. Test runs and compiling results must be orchestrated somehow.

The image below visualizes a dedicated server that is responsible for test orchestration. This is sometimes referred to as a test coordinator or test scheduler server. Building a test coordinator from scratch is not a simple affair. Fortunately, many third-party testing solutions offer distributed testing configurations that help reduce the complexity of building your own.
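To give a feel for what a coordinator does, the Python sketch below covers two of its jobs: dividing the simulated users among workers and merging the timings they report. Both function names are hypothetical, and a real coordinator also has to handle scheduling, worker failures, and clock differences.

```python
import math
import statistics


def split_users(total_users, worker_count):
    """Spread simulated users across workers as evenly as possible."""
    base, extra = divmod(total_users, worker_count)
    return [base + (1 if i < extra else 0) for i in range(worker_count)]


def merge_results(per_worker_timings):
    """Combine per-worker response times (in seconds) into one summary."""
    timings = sorted(t for worker in per_worker_timings for t in worker)
    if not timings:
        return {"count": 0}
    p95_index = max(0, math.ceil(0.95 * len(timings)) - 1)  # nearest-rank percentile
    return {
        "count": len(timings),
        "mean": statistics.fmean(timings),
        "p95": timings[p95_index],
    }
```

Note that the summary is computed from the pooled raw samples. Averaging each worker's own percentiles would give a different, and generally misleading, number.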

Scaling journey

Distributed testing introduces complexity that isn’t required out of the gate. Every use case is unique, and therefore the testing needs are too. Early in the journey, a developer-grade laptop can accomplish a lot. However, it will quickly be outgrown. A dedicated server, with many cores and copious amounts of RAM, is a practical next step.

If a load testing server cannot meet the testing demands, there are many testing platforms as a service (TPaaS) that can provide rapid access to testing infrastructure. Some situations may call for establishing and maintaining dedicated testing infrastructure. You might consider IaaS and PaaS providers to provide the foundation on which to build a custom solution.

The natural transition from a laptop to a full-blown distributed testing platform is a journey. It is not one that most companies will make overnight. However, as you start ramping up your testing practice, it’s prudent to begin researching future testing needs and their related costs. Planning for future operational expenses, staff education, and process expansion is important to stay ahead of growing testing needs.

Conclusion

You’ve now read the fundamentals of performance testing and how you can apply them to the Salesforce Platform. As your organization grows, ad hoc observations can be replaced with thoughtful performance testing. And don’t miss the opportunity to communicate the results of your tests. Communication can help you establish confidence and trust with stakeholders.

Speaking of communication, talk to the folks that are already doing this. The best feature of the Salesforce Platform is the community of people that build it, support it, and use it. Be sure that you benefit from the collective wisdom of the community by engaging with the developer forums and in the Trailblazer Community Group.

You can combine Salesforce best practices with a mature performance testing practice to enable building for the future with confidence.

About the author

Samuel Holloway is a Regional Success Architect Director at Salesforce. He focuses on custom development, enterprise architecture, and scaling solutions across the Salesforce clouds. When he’s not building solutions at Salesforce, he enjoys tinkering with computer graphics and game engines. You can see what he’s up to at GitHub @sholloway.