Batchable and Queueable are the two predominant async frameworks available to developers on the Salesforce Platform. When working with records, you may find yourself wondering which one you should be using. In this post, we’ll present an alternative solution that automatically chooses between the Batchable and Queueable Apex frameworks, leaving you free to focus on the logic you need to implement instead of which type of asynchronous execution is best.

Let’s walk through an approach that combines the best of both worlds. Both Batchable and Queueable are frequently used to:

- Perform API callouts (as callouts are not allowed within synchronous trigger code or directly within scheduled jobs)

- Process data that couldn’t be handled synchronously because of things like Salesforce limits

That being said, there are some interesting distinctions (that you may already be familiar with) which create obvious pros and cons when using either of the two frameworks.

Batchable Apex:

- Is slower to start up and slower to move between batch chunks

- Can query up to 50 million records in its start method

- Can have only five batch jobs actively processing at any given time

- Can hold additional batch jobs in the flex queue while the five concurrent slots are busy, but the flex queue can only ever hold a maximum of 100 batch jobs

Queueable Apex:

- Is quick to execute and quick to implement

- Is still subject to the Apex query row limit of 50,000 records

- Can enqueue up to 50 queueable jobs from within a synchronous transaction

- Can enqueue only one queueable job once you’re already in an asynchronous transaction

These pros and cons represent a unique opportunity to abstract away how an asynchronous process is defined and to create something reusable, regardless of how many records you need to act on.

Let’s look at an example implementation, and then at exactly how that abstraction will work.

First, an example of the design in usage

This example assumes that you’re working with a B2C Salesforce org where it’s important for the Account Name to always match that of the contact, and there can only ever be one Contact per Account. Notice how in our example ContactAsyncProcessor, the only logic that needs to exist is exactly associated with this business rule:
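The original listing is not reproduced here, so what follows is only a minimal sketch of what such a subclass might look like. It assumes the abstract AsyncProcessor parent described later in this post, with an overridable innerExecute method that receives a list of records and a get method that accepts a query string and returns something you can call kickoff on. The field choices are illustrative.

```apex
// Sketch only: relies on the AsyncProcessor parent class described later in this post.
public class ContactAsyncProcessor extends AsyncProcessor {
  // The only logic here is the business rule: the Account Name mirrors the Contact's name.
  // As noted in the post, null AccountIds and middle names are deliberately not handled.
  protected override void innerExecute(List<SObject> records) {
    Map<Id, Account> accountsToUpdate = new Map<Id, Account>();
    for (Contact con : (List<Contact>) records) {
      accountsToUpdate.put(
        con.AccountId,
        new Account(Id = con.AccountId, Name = con.FirstName + ' ' + con.LastName)
      );
    }
    update accountsToUpdate.values();
  }
}
```

Under the same assumptions, starting the process could look like this:

```apex
// The subclass never states whether it runs as a Batchable or a Queueable
new ContactAsyncProcessor().get('SELECT AccountId, FirstName, LastName FROM Contact').kickoff();
```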

Of course, this is a very simple example — it doesn’t show things like the Contact.AccountId being null, handling for middle names, and more. This example does show off how subclassing can help to simplify code. Here, you don’t need to worry how many results are returned by the example query, or whether or not you should be using a Batchable or Queueable implementation — you can simply focus on the business rules.

What does that AsyncProcessor parent class end up looking like? Let’s take a look at what’s going on behind the scenes.

Creating a shared asynchronous processor

To start off, there are some interesting technical limitations that we need to be mindful of when looking to consolidate the Batchable and Queueable interfaces:

- A batch class must be an outer class. It’s valid syntax to declare an inner class as Batchable, but trying to execute an inner class through Database.executeBatch will lead to an exception being thrown.
  - This async exception will only surface in logs and won’t be returned directly to the caller in a synchronous context, which can be very misleading since execution won’t halt as you might expect with a traditional exception.

- A queueable class can be an inner class, but an outer class that implements Database.Batchable and Database.Stateful can’t also implement System.Queueable.

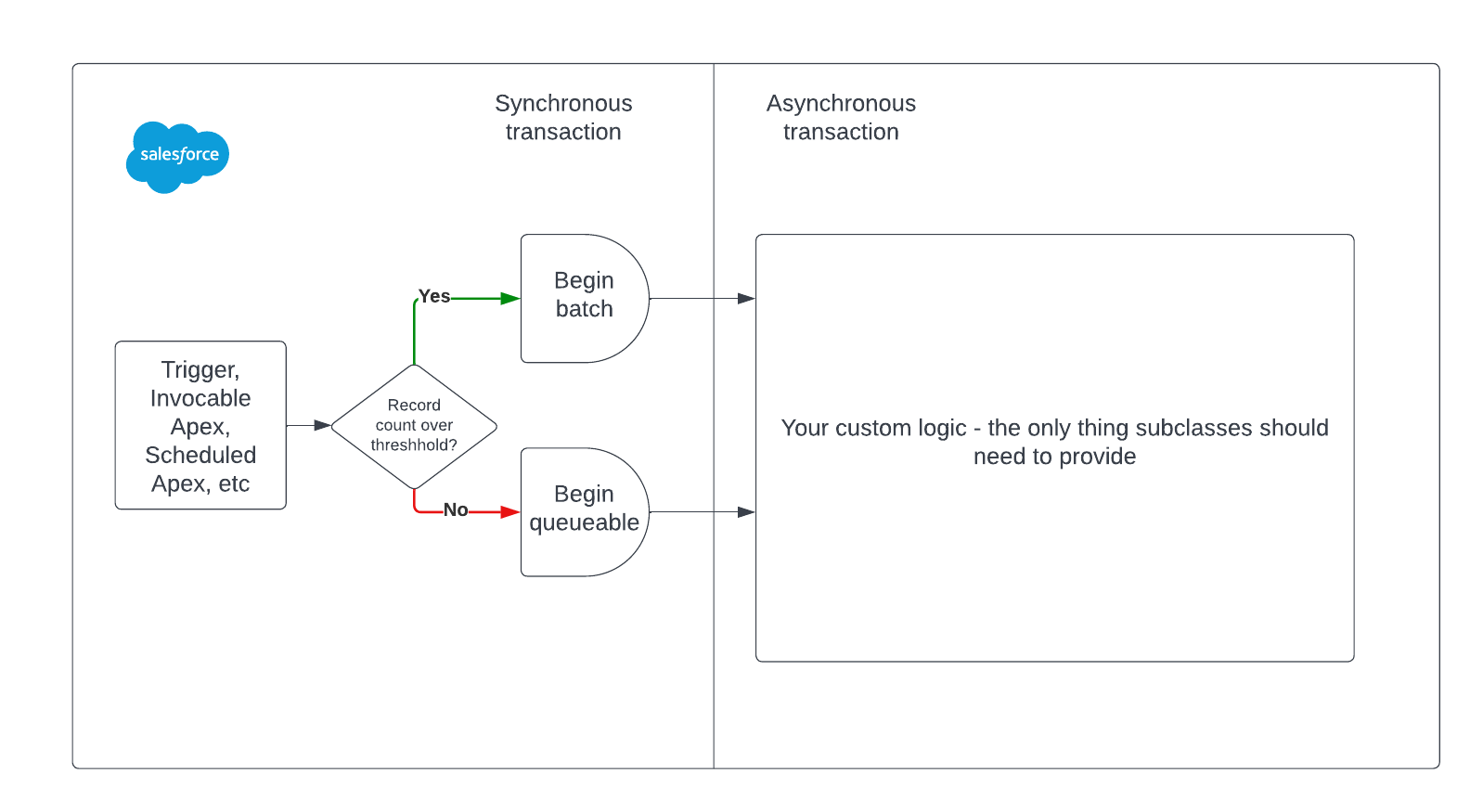

You want this framework to be flexible and to scale without having to make any changes to it. It should be capable of:

- Taking a query or a list of records.

- Assessing how many records are part of the query or list.

- Checking whether you’re below a certain threshold, which subclasses should be able to modify. If you are, start a Queueable; otherwise, start a Batchable.
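As a rough illustration of the second step, here is one way the record count might be assessed for either input. This is a sketch under assumptions: the class and method names are hypothetical, and the query rewrite only suits simple queries with no subqueries and no ORDER BY clause.

```apex
// Sketch only: RecordCountSketch and countFor are hypothetical names.
public class RecordCountSketch {
  // For a list, the count is simply its size
  public static Integer countFor(List<SObject> records) {
    return records.size();
  }

  // For a query, swap the field list for COUNT() and ask the database.
  // Assumes a simple "SELECT ... FROM ..." query with a single FROM keyword.
  public static Integer countFor(String query) {
    Integer fromIndex = query.indexOfIgnoreCase(' FROM ');
    String countQuery = 'SELECT COUNT() ' + query.substring(fromIndex + 1);
    return Database.countQuery(countQuery);
  }
}
```

The resulting number is what gets compared against the threshold to pick between the two frameworks.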

This diagram shows what needs to happen synchronously versus asynchronously:

These limitations can help to inform the overall design of the shared abstraction. For instance, you should have a way to interact with this class before it starts processing records asynchronously — this is the perfect place for an interface.

Since the Batchable class needs to be the outer class, you can first implement Process there.
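The original listing is missing from this copy of the post, so the following is a hedged sketch of what the outer class could look like, not the author’s exact code. It assumes the Process interface is nested inside AsyncProcessor and exposes a single kickoff method; if your compiler rejects a class implementing its own nested interface, a top-level interface works the same way. Handling for a list of records is left out of the start method to keep the sketch short.

```apex
// Sketch only: details beyond the names mentioned in the post are assumptions.
public abstract class AsyncProcessor implements Database.Batchable<SObject>, AsyncProcessor.Process {
  // The contract callers interact with before any asynchronous work starts
  public interface Process {
    String kickoff();
  }

  protected String query;
  protected List<SObject> records;

  // Subclasses put their business logic here
  protected abstract void innerExecute(List<SObject> records);

  // Database.Batchable: build the scope from the stored query
  public Database.QueryLocator start(Database.BatchableContext bc) {
    return Database.getQueryLocator(this.query);
  }

  // Database.Batchable: hand each chunk to the subclass
  public void execute(Database.BatchableContext bc, List<SObject> scope) {
    this.innerExecute(scope);
  }

  // Database.Batchable: nothing to do by default; subclasses may override
  public virtual void finish(Database.BatchableContext bc) {
  }

  // Satisfies Process: the outer class runs itself as a batch job
  public String kickoff() {
    return Database.executeBatch(this);
  }
}
```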

Don’t worry too much about the query and records instance variables; they will come into play soon. The crucial parts of the above are:

- The AsyncProcessor class is marked as abstract

- The innerExecute method is also abstract

- The methods required for Database.Batchable have been defined

- The kickoff method has been defined, which satisfies the Process interface

By initializing a new subclass of AsyncProcessor, and then calling the get method, you receive an instance of the AsyncProcessor.Process interface:

- Either by providing a String-based query

- Or by providing a list of records

The most important part in the above is this excerpt:

The shouldBatch Boolean determines whether a batch or a queueable process ends up starting!
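The excerpt itself is not reproduced here. As a rough sketch of the kind of decision it describes, the check can be as small as comparing a record count against a threshold and confirming that another queueable can still be enqueued. The class name, the method signature, and the default threshold of 10,000 are assumptions made for illustration.

```apex
// Sketch only: the class name and the default threshold are hypothetical.
public class AsyncDecisionSketch {
  // In the pattern described here, subclasses would be able to override this value
  public static final Integer DEFAULT_LIMIT_TO_BATCH = 10000;

  // Returns true when a Batchable should be started instead of a Queueable
  public static Boolean shouldBatch(Integer recordCount, Integer limitToBatch) {
    Boolean overThreshold = recordCount > limitToBatch;
    // Only one queueable can be enqueued from an async transaction, so check what is left
    Boolean canEnqueue = Limits.getQueueableJobs() < Limits.getLimitQueueableJobs();
    return overThreshold || canEnqueue == false;
  }
}
```

Keeping the decision in one place means callers never need to know which framework was picked.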

Finally, the AsyncProcessorQueueable implementation:

The queueable can also implement the System.Finalizer interface, which allows you to consistently handle errors using only a platform event handler for the BatchApexErrorEvent:
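Neither listing survives in this copy, so here is a minimal, standalone sketch of a class that is both a Queueable and its own Finalizer. The class name and the placeholder work are assumptions, and the BatchApexErrorEvent wiring the post mentions is deliberately left out; this version only logs the failure.

```apex
// Sketch only: the class name and the placeholder work are illustrative.
public class QueueableWithFinalizerSketch implements System.Queueable, System.Finalizer {
  private final List<SObject> records;

  public QueueableWithFinalizerSketch(List<SObject> records) {
    this.records = records;
  }

  // Queueable entry point
  public void execute(System.QueueableContext qc) {
    // Attach the finalizer first so it still runs if the work below throws
    System.attachFinalizer(this);
    // Placeholder for the real work; in the pattern above this would delegate to innerExecute
    System.debug('Processing ' + this.records.size() + ' records');
  }

  // Finalizer entry point: runs after the queueable finishes, whether it succeeded or failed
  public void execute(System.FinalizerContext fc) {
    if (fc.getResult() == System.ParentJobResult.UNHANDLED_EXCEPTION) {
      // A fuller implementation could route this to shared error handling
      System.debug(
        LoggingLevel.ERROR,
        'Job ' + fc.getAsyncApexJobId() + ' failed: ' + fc.getException().getMessage()
      );
    }
  }
}
```

Because the two execute methods differ only by parameter type, one class can serve both roles.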

In summary, the overall idea is that subclasses will extend the outer AsyncProcessor class, which forces them to define the innerExecute abstract method.

- They can then call kickoff to start up their process without having to worry about query limits or which async framework is going to be used by the underlying platform.

- All platform limits, like only being able to start one queueable per async transaction, are automatically handled for you.

- You no longer have to worry about how many records are retrieved by any given query; the process will be automatically batched for you if you would otherwise be in danger of exceeding the per-transaction query row limit.

- Subclasses can opt into implementing things like Database.Stateful and Database.AllowsCallouts when necessary for their own implementations. Since these are marker interfaces, and don’t require a subclass to implement additional methods, it’s better for only the subclasses that absolutely need this functionality to opt into it (instead of always having them be implemented on AsyncProcessor itself).

Because, by default, subclasses only have to define their own innerExecute implementation, you are freed up from all of the other ceremony that typically comes with creating standalone Batchable and Queueable classes. Logic that’s specific to your implementation, such as keeping track of how many callouts have been performed if you’re doing something like one callout per record, still needs to be put in place and tested.

Here’s a more complicated example showing how to recursively restart the process if you would go over the callout limit:
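The original listing is missing here, so this is only a sketch of how such a subclass might be written. It assumes the AsyncProcessor parent described above, including a get method that accepts a list of records. The class name and the named credential in the endpoint are hypothetical.

```apex
// Sketch only: relies on the AsyncProcessor parent; the endpoint is a made-up named credential.
public class CalloutAsyncProcessor extends AsyncProcessor implements Database.AllowsCallouts {
  protected override void innerExecute(List<SObject> records) {
    // Work on a copy so the caller's list is left untouched
    List<SObject> remaining = new List<SObject>(records);

    // One callout per record, stopping before the per-transaction callout limit is hit
    while (remaining.isEmpty() == false && Limits.getCallouts() < Limits.getLimitCallouts()) {
      SObject current = remaining.remove(0);
      HttpRequest req = new HttpRequest();
      req.setEndpoint('callout:Example_Named_Credential/records/' + current.Id);
      req.setMethod('POST');
      new Http().send(req);
    }

    // Anything left over is handed back to the framework in a fresh async transaction
    if (remaining.isEmpty() == false) {
      this.get(remaining).kickoff();
    }
  }
}
```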

As another marker interface example, here’s what using Database.Stateful looks like:
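Again, the listing is not reproduced in this copy. A hedged sketch of a stateful subclass follows; it assumes the AsyncProcessor parent described above and that the parent’s finish method is declared virtual so it can be overridden. The class name is hypothetical.

```apex
// Sketch only: assumes AsyncProcessor declares finish as virtual.
public class StatefulCountProcessor extends AsyncProcessor implements Database.Stateful {
  // Database.Stateful keeps this value between batch chunks
  private Integer processedCount = 0;

  protected override void innerExecute(List<SObject> records) {
    this.processedCount += records.size();
  }

  public override void finish(Database.BatchableContext bc) {
    System.debug('Processed ' + this.processedCount + ' records');
  }
}
```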

Notice the complete lack of ceremony in both of these examples. Once you have all of the complicated bits in AsyncProcessor, you get to focus purely on logic. This really helps keep your classes small and well-organized.

Unit testing the async processor

Here, we’ll show just one test, which proves that a subclass of AsyncProcessor automatically batches when the configured limit for queueing has been exceeded. You can access all of the tests by visiting the repository for this project.

Conclusion

The AsyncProcessor pattern lets us focus on implementing our async logic without having to directly specify exactly how the work is performed. More advanced users of this pattern may prefer to override information like the batch size, or allow for things like with/without sharing query contexts. While there are many additional nuances that can be considered, this pattern is a great recipe that can also be used as-is whenever you need to use asynchronous Apex. Check out the full source code to learn more.

About the author

James Simone is a Lead Member of Technical Staff at Salesforce, and he has been developing on the Salesforce Platform since 2015. He’s been blogging since late 2019 on the subject of Apex, Flow, LWC, and more in The Joys of Apex. When not writing code, he enjoys rock climbing, sourdough bread baking, and running with his dog. He’s previously blogged about the AsyncProcessor pattern in The Joys of Apex post: Batchable & Queueable Apex.