In today’s world of information abundance, quickly extracting insights from large content sources is more crucial than ever. AI-driven querying, made possible by generative AI and large language models (LLMs), lets users get answers directly from their own data. Instead of sifting through long videos or documents, you can now ask questions about your specific content and receive answers grounded in it.

This isn’t just a future concept: it’s already gaining traction in software development. For us as developers, mastering this technique can change the way we use and think about digital content.

This blog post looks at how generative AI can query content. Specifically, it shows how you can use the open-source tools LangChain and Node.js to build a tool for asking technical questions about the content of YouTube videos, such as those published on our Salesforce Developers channel.

Building AI applications

With generative AI, you can now use a conversational approach to explore data and get detailed results back. This capability, though, is typically limited to the data the LLM was trained on. As a content creator and avid learner, I primarily share knowledge through videos, but finding a specific answer in an hour-long video can be challenging. Imagine if you could ask questions about a video (or multiple videos) and receive structured, accurate answers based on its content. Generative AI makes this possible.

How can you unlock this capability? Must you train an LLM using the video content? As Christophe Coenraets explains in How To Unlock the Power of Generative AI Without Building Your Own LLM, there is no need. Instead, you can use a context-aware prompt.

The idea is to craft a prompt that holds the context pertinent to the question. But where can this context come from? Video transcripts are a natural candidate. In the AI field, this approach is known as retrieval-augmented generation.

Retrieval-augmented generation (RAG)

Retrieval-augmented generation, commonly known as RAG, combines the strengths of both retrieval-based and generative methods in AI. Instead of the model generating answers purely from its internal knowledge, RAG first searches (or “retrieves”) relevant context from a large dataset and then uses that context to generate a more informed response. It’s like having a research assistant that quickly fetches the most pertinent information on a topic and then formulates a detailed answer based on that information. This blend of retrieval and generation helps improve both the accuracy of the AI’s response and its relevance to the specific query.

This process consists of three stages:

- Index

- Retrieve

- Augment

The index stage begins with loading the documents containing the relevant information via a document loader. These documents are then broken down into smaller chunks with a document chunker, making them more manageable for retrieval. Next, the smaller text chunks are transformed into vectors using an embedder. This conversion allows them to be searched efficiently using similarity search algorithms. Finally, these vectors, or embeddings, are stored in a vector store, making them readily available for future retrievals.
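To make the chunking step more concrete, here is a minimal JavaScript sketch of recursive character splitting, the strategy behind the RecursiveCharacterTextSplitter used later in this post. It is a simplified illustration under our own assumptions, not LangChain’s implementation: the function name splitRecursively and its separator list are hypothetical, and chunk overlap is left out. It tries paragraph breaks first, then line breaks, then spaces, and only cuts mid-word when nothing else keeps a piece under chunkSize.

```javascript
// Hypothetical, simplified recursive splitter illustrating the idea behind
// LangChain's RecursiveCharacterTextSplitter; not its real implementation.
// Chunk overlap is omitted to keep the sketch short.
function splitRecursively(text, chunkSize, separators = ["\n\n", "\n", " ", ""]) {
  // Small enough already: keep the text as one chunk (skip blank text).
  if (text.length <= chunkSize) return text.trim() ? [text] : [];

  const [sep, ...finer] = separators;
  // Last resort: cut at fixed character offsets.
  if (sep === undefined || sep === "") {
    const pieces = [];
    for (let i = 0; i < text.length; i += chunkSize) {
      pieces.push(text.slice(i, i + chunkSize));
    }
    return pieces;
  }

  const chunks = [];
  let current = "";
  for (const part of text.split(sep)) {
    const candidate = current ? current + sep + part : part;
    if (candidate.length <= chunkSize) {
      // Greedily merge neighboring parts while they still fit.
      current = candidate;
      continue;
    }
    if (current) chunks.push(current);
    if (part.length <= chunkSize) {
      current = part;
    } else {
      // This part alone is too large: retry it with the next, finer separator.
      chunks.push(...splitRecursively(part, chunkSize, finer));
      current = "";
    }
  }
  if (current) chunks.push(current);
  return chunks;
}

const transcript = "Para one is here.\n\nPara two is a bit longer than one.\n\nThree.";
console.log(splitRecursively(transcript, 40));
```

Splitting on natural boundaries first keeps paragraphs and sentences intact where possible, which tends to give the embedder more coherent chunks than cutting at fixed offsets.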

In the retrieval stage, the user’s question is converted into a vector using the same embedder. A similarity search algorithm then fetches the text chunks from the vector store whose vectors are closest to the question’s vector.
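The retrieval step boils down to a nearest-neighbor search. The sketch below is a brute-force version, assuming each stored chunk is a { text, vector } object and using cosine similarity as the measure of closeness (an assumption for illustration; vector stores such as Faiss provide optimized indexes and other distance metrics). The function names are hypothetical, and the three-dimensional vectors are made up to keep the example readable.

```javascript
// Cosine similarity: dot product divided by the product of the vector lengths.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) throw new Error("Vectors must have the same dimension");
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // Treat an all-zero vector as unrelated to everything.
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Brute-force search over an in-memory store of { text, vector } entries:
// score every entry against the query and keep the k best, best first.
function similaritySearch(store, queryVector, k = 2) {
  return store
    .map((entry) => ({ ...entry, score: cosineSimilarity(entry.vector, queryVector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Made-up 3-dimensional vectors; real text-embedding-ada-002 vectors have 1536 dimensions.
const store = [
  { text: "Document loaders fetch content such as transcripts.", vector: [0.9, 0.1, 0.0] },
  { text: "Embeddings turn text into vectors.", vector: [0.1, 0.9, 0.1] },
  { text: "Vector stores keep embeddings for search.", vector: [0.0, 0.2, 0.9] },
];
const questionVector = [0.2, 0.8, 0.2]; // pretend embedding of a question about embeddings
const results = similaritySearch(store, questionVector, 2);
console.log(results.map((r) => `${r.score.toFixed(3)} ${r.text}`));
```

Scoring every stored vector is fine for a handful of videos; dedicated vector stores exist because this linear scan becomes the bottleneck as the number of chunks grows.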

Lastly, the initial prompt is augmented with the retrieved context and forwarded to the LLM to obtain an answer relevant to that context.
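Augmentation is mostly string assembly. This sketch fills a grounding template with the retrieved chunks and the user’s question; the template wording and the buildAugmentedPrompt helper are hypothetical, not the exact prompt used in the app described later.

```javascript
// Hypothetical grounding template: {context} and {question} are filled at
// query time. The wording is an assumption, not the prompt used in the app.
const TEMPLATE = `Use only the following context to answer the question.
If the answer is not in the context, say that you don't know.

Context:
{context}

Question: {question}
Answer:`;

// Join the retrieved chunks and fill both placeholders in a single pass,
// so placeholder-like text inside a chunk is left untouched.
function buildAugmentedPrompt(template, retrievedChunks, question) {
  const values = { context: retrievedChunks.join("\n---\n"), question };
  return template.replace(/\{(context|question)\}/g, (_, key) => values[key]);
}

const prompt = buildAugmentedPrompt(
  TEMPLATE,
  ["A document loader fetches the transcript.", "A vector store keeps the embeddings."],
  "What does a vector store do?"
);
console.log(prompt);
```

Telling the model to admit when the context lacks an answer is a common way to reduce made-up responses, although it does not eliminate them.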

Text embeddings and vectors

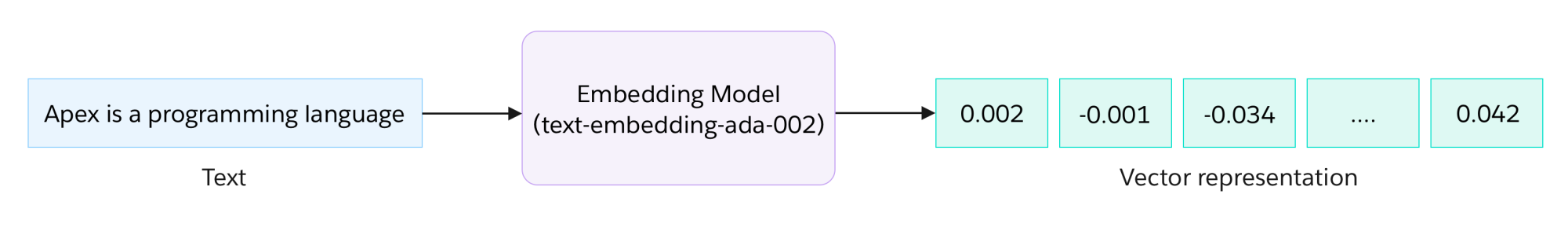

Text embeddings and vectors represent textual information in a format that machines can easily process. At its core, a text embedding converts words, phrases, or longer passages into numerical vectors of a fixed size. This transformation is crucial because while text is easy for humans to interpret, machines excel when data is in numerical form. The resulting vectors capture the semantic meaning of the text, so that texts with similar meanings have vectors that are close to each other in the vector space. By transforming text into vectors, algorithms can perform similarity checks, clustering, and similar tasks efficiently and accurately.
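To see fixed-size vectors and closeness in code, the sketch below uses a toy bag-of-words vector in place of a real embedding model. This is a deliberate simplification: it only rewards shared words, while a learned model such as text-embedding-ada-002 also places synonyms and paraphrases close together. The helper names tokenize, buildVocabulary, and toyEmbed are hypothetical.

```javascript
// Toy stand-in for an embedding model (hypothetical helpers): a bag-of-words
// vector over a fixed vocabulary. Every text maps to a vector of the same size,
// but unlike a learned embedding it only reflects shared words, not meaning.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

function buildVocabulary(texts) {
  return [...new Set(texts.flatMap(tokenize))];
}

function toyEmbed(text, vocabulary) {
  const vector = new Array(vocabulary.length).fill(0);
  for (const word of tokenize(text)) {
    const i = vocabulary.indexOf(word);
    if (i !== -1) vector[i] += 1; // count occurrences of each known word
  }
  return vector;
}

// Cosine similarity: values closer to 1 mean the vectors point the same way.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA && normB ? dot / (Math.sqrt(normA) * Math.sqrt(normB)) : 0;
}

const texts = [
  "How do I deploy a Node.js app?",
  "Deploy your Node.js app in minutes",
  "Baking sourdough bread at home",
];
const vocabulary = buildVocabulary(texts);
const [question, related, unrelated] = texts.map((t) => toyEmbed(t, vocabulary));
console.log(cosineSimilarity(question, related).toFixed(3));
console.log(cosineSimilarity(question, unrelated));
```

The related sentence scores higher than the unrelated one because the vectors share dimensions; a real embedding model would also rank "ship my server code" close to the question, which this toy cannot do.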

The example in this blog post is based on OpenAI, which provides various embedding models, with text-embedding-ada-002 being the most recent and most popular. Keep in mind that there are other options for generating embeddings, such as word2vec, GloVe, and the models available through Hugging Face.

While it’s possible to craft custom code using various APIs and libraries for a RAG application, there are frameworks that consolidate all essential tools, supporting a range of models and integrations. Of these, LangChain is among the most prevalent in the AI ecosystem.

What is LangChain?

LangChain is a comprehensive toolkit designed for developers looking to integrate AI-powered language capabilities into their applications. At its core, LangChain simplifies the complexities of working with language models, offering a streamlined interface for tasks like text embedding, contextual querying, and more. Its modular and flexible design enables developers to pick and choose components that fit their specific needs. Whether it’s for processing large datasets or enhancing user interactions with smart responses, LangChain provides the essential tools and frameworks to enable language-based tasks in applications. LangChain is available for both Python and JavaScript.

Putting it all together

To see how LangChain works, let’s create an application named ask-youtube-ai that loads the transcripts of a list of YouTube videos into an in-memory vector database, answers questions about the content of those videos, and returns the generated answers along with an indication of which videos contained the information. While this application is a basic demonstration of the concepts covered in this blog post, its components can be repurposed for more sophisticated applications.
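Reporting which videos an answer came from amounts to collecting the distinct sources of the retrieved chunks. Here is a hypothetical helper, assuming each chunk is an object with pageContent and a metadata.source field holding the video URL (and optionally metadata.title); the metadata your loader actually attaches may differ. The URLs below are placeholders.

```javascript
// Hypothetical helper: append the distinct videos that supplied context.
// Assumes each chunk looks like { pageContent, metadata: { source, title } },
// with `source` holding the video URL; these field names are assumptions.
function formatAnswerWithSources(answer, sourceDocuments) {
  const videos = new Map(); // source URL -> display title, in first-seen order
  for (const doc of sourceDocuments) {
    const { source, title = source } = doc.metadata ?? {};
    if (source && !videos.has(source)) videos.set(source, title);
  }
  const lines = [...videos].map(([url, title]) => `- ${title} (${url})`);
  return lines.length ? `${answer}\n\nSources:\n${lines.join("\n")}` : answer;
}

// Usage with placeholder URLs: two chunks come from the same video.
const output = formatAnswerWithSources("Use a document loader to ingest the transcripts.", [
  { pageContent: "chunk 1", metadata: { source: "https://www.youtube.com/watch?v=VIDEO_A", title: "Video A" } },
  { pageContent: "chunk 2", metadata: { source: "https://www.youtube.com/watch?v=VIDEO_A", title: "Video A" } },
  { pageContent: "chunk 3", metadata: { source: "https://www.youtube.com/watch?v=VIDEO_B" } },
]);
console.log(output);
```

Deduplicating by source matters because several chunks of one long video are often retrieved together for a single question.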

First, create a directory called ask-youtube-ai, and create a basic Node.js project using npm init.

Note: Since you will be using ECMAScript modules, make sure your package.json has the property "type": "module" set.

Next, install the following dependencies:

- langchain – framework for AI applications

- openai – OpenAI official library for Node.js

- youtubei.js – retrieves metadata from YouTube videos

- youtube-transcript – retrieves YouTube video transcripts

- faiss-node – Node.js bindings for Faiss, a library for efficient similarity search

- dotenv – utility to read .env configuration files

Note: You’ll need an OpenAI API key to run the example.

To wrap things up, create the index.js and .env files as detailed below. Each step is explained in the comments within the code.

index.js

.env

Note: Be aware that executing this script will consume your OpenAI free credits and may also incur charges.

Code highlights

Here’s an overview of the main steps in the code, which broadly align with the diagram above.

- Load the transcripts from YouTube using the YoutubeLoader document loader

- Split the transcripts into chunks of 1000 characters using the RecursiveCharacterTextSplitter text splitter

- Get the text embeddings using the OpenAI embeddings API (OpenAIEmbeddings) with the default model text-embedding-ada-002

- Store the embeddings in a vector database with FaissStore (this example uses an in-memory database called Faiss)

- Create an instance of a chat model using OpenAI gpt-3.5-turbo-16k with ChatOpenAI

- Create a prompt template with PromptTemplate, containing the grounding prompt that will include the retrieved context and the question

- Create a chain using the RetrievalQAChain class from LangChain to perform the retrieval-augmented generation

- Ask questions and get answers with their respective source documents
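For readers who want to see the whole flow without API keys, here is a dependency-free sketch of the same pipeline: index, retrieve, augment, and answer with sources. It is not the app’s index.js and does not use LangChain; the embedding and chat models are injected as async functions (embed and llm, both hypothetical parameters), chunks are cut at fixed offsets instead of with a recursive splitter, and the stand-in models at the bottom exist only so the example runs offline.

```javascript
// Hypothetical, dependency-free RAG pipeline mirroring the steps above.
// The embedding model and chat model are injected as async functions; in the
// article these roles are filled by LangChain and OpenAI classes instead.

// Cosine similarity between two equal-length vectors.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA && normB ? dot / (Math.sqrt(normA) * Math.sqrt(normB)) : 0;
}

// Index: cut each transcript into fixed-size chunks, embed them, keep them in memory.
async function buildIndex(transcripts, embed, chunkSize = 1000) {
  const store = [];
  for (const { source, text } of transcripts) {
    for (let i = 0; i < text.length; i += chunkSize) {
      const pageContent = text.slice(i, i + chunkSize);
      store.push({ pageContent, metadata: { source }, vector: await embed(pageContent) });
    }
  }
  return store;
}

// Retrieve, augment, generate: pick the k closest chunks, ground the prompt
// in them, and return the answer together with the chunks that were used.
async function ask(store, question, { embed, llm, k = 2 }) {
  const queryVector = await embed(question);
  const sourceDocuments = store
    .map((doc) => ({ doc, score: cosineSimilarity(doc.vector, queryVector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map(({ doc }) => ({ pageContent: doc.pageContent, metadata: doc.metadata }));
  const context = sourceDocuments.map((d) => d.pageContent).join("\n---\n");
  const prompt = `Answer using only this context:\n${context}\n\nQuestion: ${question}\nAnswer:`;
  return { text: await llm(prompt), sourceDocuments };
}

// Offline stand-ins (hypothetical): a keyword-count "embedding" and an "LLM"
// that simply echoes the first line of context from the prompt.
const KEYWORDS = ["loader", "vector", "prompt"];
const fakeEmbed = async (text) => KEYWORDS.map((kw) => text.toLowerCase().split(kw).length - 1);
const fakeLlm = async (prompt) => prompt.split("\n")[1];

const transcripts = [
  { source: "video-a", text: "A document loader fetches the transcript." },
  { source: "video-b", text: "A vector store keeps the embeddings." },
];

buildIndex(transcripts, fakeEmbed)
  .then((store) => ask(store, "What does a vector store do?", { embed: fakeEmbed, llm: fakeLlm, k: 1 }))
  .then((result) => console.log(result.text, result.sourceDocuments.map((d) => d.metadata.source)));
```

Because each stage only depends on the function it is handed, replacing the stand-ins with real embedding and chat calls leaves the shape of the code unchanged, which is the same kind of modularity LangChain provides.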

Potential applications

Building on the techniques covered in this blog, you can craft applications such as:

- Enhanced knowledge bases and FAQs: RAG can be used to develop AI-driven chatbots and virtual assistants that tap into extensive knowledge bases or FAQs to provide detailed and contextually relevant responses to user queries.

- Research assistance: Academic and industry researchers can use RAG for literature reviews. The system can retrieve relevant studies or articles based on queries, and generate summaries or insights.

- Content recommendations: In media platforms, RAG can be used to suggest articles, videos, or songs based on user queries, making the recommendations more contextually relevant.

- Enhanced search engines: Traditional search engines can be enhanced with RAG to not just provide links but also generate concise answers or summaries for user queries.

- Interactive entertainment: In video games or interactive narratives, RAG can be employed to generate contextually relevant dialogs or story elements based on user choices or game progress.

And the possibilities don’t end there. Take some time to check out the wide variety of text and web document loader integrations supported by LangChain for more inspiration on the applications you can build.

Further resources

- Emerging Architectures for LLM Applications

- Retrieval Augmented Generation (RAG) Using LangChain

- OpenAI API

- OpenAI Embeddings

- LangChain.js YouTube Transcripts Retrieval

- LangChain.js Faiss Vector Store

- LangChain.js Retrieval QA

About the author

Julián Duque is a Principal Developer Advocate at Salesforce, where he focuses on Node.js, JavaScript, and backend development. He is passionate about education and sharing knowledge, and has been involved in organizing developer and tech communities since 2001.

Follow him on Twitter/X @julian_duque, LinkedIn, or Threads @julianduquej