When an agent is deployed to handle a customer interaction, it’s not enough for it to answer a single question correctly. Real conversations are multi-turn: customers greet the agent, introduce themselves, ask follow-ups, and switch topics. Every turn matters, and every answer must remain consistent with the context previously set in the conversation.

In this post, we’ll look at how Agentforce Testing Center supports multi-turn conversations, and we’ll explore the features that let it mimic and test user interactions.

Conversation history as input

Agentforce Testing Center allows developers to bring full conversations directly from Agent Builder. Rather than testing single prompts, conversations can be replayed and validated turn by turn, with each step using the cumulative conversation history as context.

Testing Center:

- Uses the first N turns to evaluate turn N+1

- Confirms that the agent remembers what was said earlier

- Ensures the right knowledge is applied at the right time
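To make the "first N turns evaluate turn N+1" idea concrete, here is a minimal Python sketch of how a recorded conversation could be split into per-turn test cases. It illustrates the concept under our own assumptions and is not Testing Center's implementation. The `Turn` and `TurnTestCase` classes and the `expand_conversation` function are hypothetical names, and the agent replies in the usage section are placeholders.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Turn:
    """One exchange: the user's message and the reply we expect from the agent."""
    user: str
    agent: str


@dataclass
class TurnTestCase:
    """A single-turn check that carries the earlier turns as context (hypothetical type)."""
    history: List[Tuple[str, str]]  # (role, message) pairs from earlier turns
    utterance: str                  # user message under test
    expected_response: str          # reply the agent should give


def expand_conversation(turns: List[Turn]) -> List[TurnTestCase]:
    """Split an N-turn conversation into N test cases.

    Case k replays turns 0..k-1 as history and tests turn k, so every
    non-empty history ends with an agent message.
    """
    cases: List[TurnTestCase] = []
    history: List[Tuple[str, str]] = []
    for turn in turns:
        # Snapshot the history so later turns don't leak into this case.
        cases.append(TurnTestCase(list(history), turn.user, turn.agent))
        # Grow the cumulative history for the next case.
        history.append(("user", turn.user))
        history.append(("agent", turn.agent))
    return cases


if __name__ == "__main__":
    # Placeholder replies: real expected responses come from a recorded conversation.
    conversation = [
        Turn("Hi, I'm Alex.", "<greeting that uses the name Alex>"),
        Turn("Are pets allowed at the resort?", "<pet policy answer>"),
        Turn("What are the check-in and check-out procedures?", "<check-in/out answer>"),
    ]
    for case in expand_conversation(conversation):
        print(len(case.history), case.utterance)
```

For a three-turn conversation this yields three cases whose histories hold 0, 2, and 4 messages, each non-empty history ending with the agent's reply.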

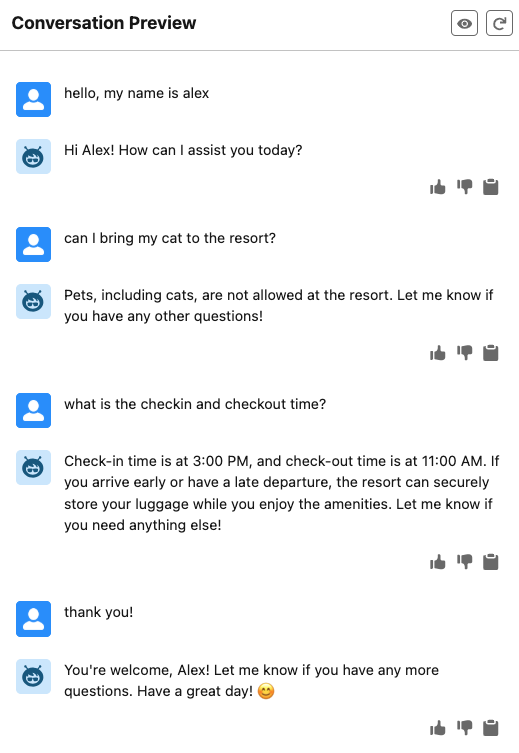

Let’s take an example based on Coral Cloud Resorts, a fictitious seaside resort, and its knowledge source, the Coral Cloud Resorts FAQs, which covers check-in policies, amenities, and resort rules. A customer conducts the following conversation with a service agent.

The customer:

- Greets the agent and introduces their name

- Asks the agent to confirm whether pets are allowed at the resort

- Asks the agent to confirm check-in and check-out procedures

This flow would lead to a conversation like the one shown in the screenshot below.

In the conversation above, we can see how the context builds incrementally. The agent remembers the customer’s name (Alex) and uses it at the end of the conversation, even though it handled several other prompts in between.

From conversation to test suite

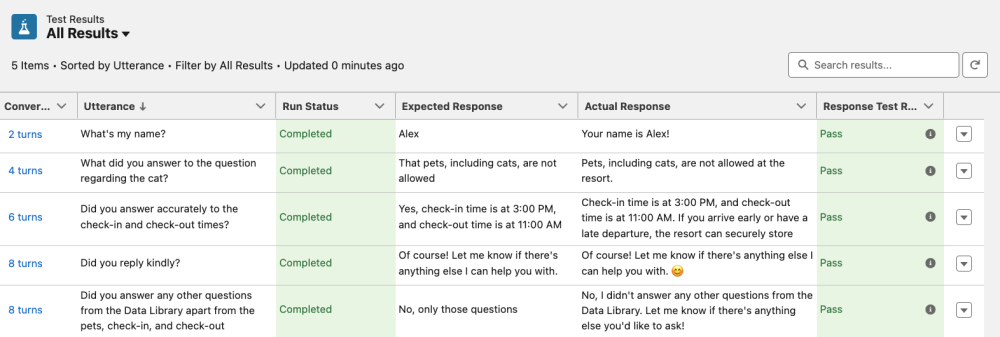

With the conversation history provided as input, this entire flow can be transformed into a batch test. Each user message is paired with the expected agent response, and the full conversation history is included in the test input. Agentforce Testing Center then evaluates each turn, one at a time, ensuring accuracy at every stage of the dialogue.

The example conversation that we shared earlier can be automatically tested with the following CSV. Note that for the sake of simplicity, we are not checking topics or actions in the tests.
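If you want to generate a file like this instead of writing it by hand, the Python sketch below builds one row per turn and attaches the cumulative history of earlier turns to each row. Treat it as an assumption-laden illustration: the headers in `COLUMNS`, the JSON shape of the history cell (an array of `role`/`message` objects), and the function name `build_batch_csv` are all hypothetical, so check the Testing Center CSV template for the exact headers and history format it expects. Matching the example, it leaves out topic and action columns.

```python
import csv
import io
import json
from typing import List, Tuple

# Hypothetical headers: replace them with the ones in the Testing Center template.
COLUMNS = ["Conversation History", "Utterance", "Expected Response"]


def build_batch_csv(turns: List[Tuple[str, str]]) -> str:
    """Return CSV text with one row per (user message, expected agent reply) turn.

    Each row's history cell holds every earlier message as a JSON array of
    {"role", "message"} objects (an assumed format); the first row has none.
    """
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(COLUMNS)
    history = []
    for user_msg, agent_msg in turns:
        # Serialize now, before this turn is appended to the history.
        writer.writerow([json.dumps(history) if history else "", user_msg, agent_msg])
        history.append({"role": "user", "message": user_msg})
        history.append({"role": "agent", "message": agent_msg})
    return out.getvalue()
```

When saving the result to disk, opening the file with `open(path, "w", newline="")` keeps the csv module's line endings intact.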

Tip: When building multi-turn tests like the one above, make sure that the conversation history in every row ends with a message from the agent, not from the user.
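This tip is easy to check automatically before uploading. The sketch below scans the history cells of a test file and reports the rows whose history does not end with an agent message. It assumes each history cell is either empty (the first turn) or a JSON array of objects with a `role` key; that format and the function name `rows_with_bad_history` are our own assumptions, not documented Testing Center details.

```python
import json
from typing import Iterable, List


def rows_with_bad_history(histories: Iterable[str]) -> List[int]:
    """Return the indexes of rows whose history does not end with an agent message.

    Assumes each cell is empty (no history yet) or a JSON array of
    {"role": ..., "message": ...} objects; this format is hypothetical.
    """
    bad = []
    for i, cell in enumerate(histories):
        if not cell.strip():
            continue  # first turn: nothing to check
        messages = json.loads(cell)
        # An empty array or a trailing user message both break the rule.
        if not messages or messages[-1].get("role") != "agent":
            bad.append(i)
    return bad
```

Running it over the history column and failing the build when the returned list is non-empty catches a misaligned row before it produces confusing test results.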

Visibility and confidence

Once uploaded, the batch test definition provides clear pass/fail results for each turn. Did the agent greet the customer properly? Did it remember the customer’s name introduced earlier? Did it answer the pet policy question correctly? All of these checks can now run automatically.

The screenshot below shows test results from the previously generated CSV file, including the conversation history, the utterance, and the expected vs. actual response.

This approach gives developers confidence that agents are not just answering correctly today but will continue to perform reliably as knowledge sources are updated or agent logic evolves. Think of it as an agentic non-regression test.

Conclusion

Conversation-level testing brings automation closer to real-life customer interactions and helps to ensure quality. Instead of one-off checks, developers can now validate end-to-end experiences, ensuring that memory, context, and knowledge retrieval all work together seamlessly.

With Agentforce Testing Center and conversation history as input, everyday conversations become reusable, automated validations. This elevates testing from simple Q&A to full conversational assurance — delivering the right answer, every time, across the entire flow.

Resources

- Agentforce Decoded Video: Automating Multi-Turn Agent Testing with Conversation History in Agentforce

- Documentation: Agentforce Testing Center

- GitHub: Coral Cloud sample app

About the author

Alex Martinez was part of the MuleSoft Community before joining MuleSoft as a Developer Advocate. She founded ProstDev to help other professionals learn more about content creation. In her free time, you’ll find Alex playing Nintendo or PlayStation games and writing reviews about them. Follow Alex on LinkedIn or in the Trailblazer Community.