Building enterprise-grade agents often involves a fundamental tension. Large Language Models (LLMs) are great at natural conversation, but they lack the predictability that enterprise workflows demand. You can prompt an LLM to verify a customer before sharing order details, but you can’t guarantee it will follow that sequence every time. Traditional prompt engineering doesn’t solve this. As you write longer prompts and add more instructions, the agent’s behavior can become less predictable.

Agent Script is Salesforce’s new language for building agents. It introduces hybrid reasoning, the ability to mix deterministic code with natural language prompts in the same instruction block. With Agent Script, business-critical logic executes reliably every time. Conversational elements stay flexible.

It’s also a context engineering tool. Rather than hoping the LLM picks up on what’s relevant, you programmatically construct the prompt it receives based on variables, conditions, and conversation state. The LLM only sees what matters for the current moment.

In this post, we’ll cover the language fundamentals and basic building blocks of an agent with Agent Script. You’ll learn the syntax, understand the flow of control, and see how Agent Script constructs prompts at runtime.

Language Basics at a Glance

Before we start building, let’s cover the foundational syntax elements you’ll encounter throughout Agent Script. Understanding these basics will make the rest of the blog much easier to follow.

Property-Based Syntax

Everything in Agent Script is expressed as key: value pairs, making the language readable and declarative.

Whitespace Sensitivity

Agent Script uses indentation to define structure, similar to Python. You must use spaces (not tabs), and consistent indentation is essential for the script to parse correctly. The recommended convention is three spaces per indentation level.

Note: If your indentation is inconsistent, the script won’t compile. Pay close attention to this when copying and pasting code.

Resource References with @

Agent Script uses the @ symbol to reference resources like variables, actions, topics, and outputs. This is how different parts of your agent communicate.

Note: A common mistake is assuming @ works like a standard Salesforce merge field where you can reference any org resource. @actions.get_order works only because you defined get_order within your topic’s actions: block. Something like @Account.Name won’t work because it’s not a block in your script or a built-in utility.

Comments with #

You can add comments anywhere in your script using the # symbol. Everything after the # on that line is ignored by the compiler. Use comments liberally to document your agent’s logic. Agent Script has no dedicated multiline comment syntax. For multi-line comments, use # at the start of each line.

Arrow Syntax (->) for Procedural Logic

When you need to write step-by-step logic — like conditionals, running actions, or setting variables — use the arrow syntax. This switches the block from declarative mode to procedural mode.

The arrow tells Agent Script: “What follows is executable logic, not just configuration.”

Note: A plain colon (:) can also be used, but -> is commonly preferred for clarity.

Pipe Syntax (|) for Prompt Text

The pipe symbol marks text that should be sent to the LLM as part of the prompt. This is how you provide natural language instructions.

You can also use the pipe for multiline strings outside of procedural blocks:

The key insight here is that logic executes deterministically, while pipe text is assembled into a prompt for the LLM. This separation is the heart of hybrid reasoning.

Template Expressions ({! }) for Dynamic Values

To inject variable values or expressions into your prompt text, wrap them in formula syntax {! }. At runtime, Agent Script replaces these with actual values.

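For example, suppose the prompt text greets the customer by customer_name and then reports order_status and order_total. If customer_name is “John”, order_status is “shipped”, and order_total is 150, the LLM receives the following input: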

Hello John, your order status is shipped.

Your order total is $150.

The LLM sees concrete values rather than variable names. This makes its job easier and your agent more reliable.
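The substitution step can be modeled in a few lines of Python. This is only a sketch of the behavior described here, not Agent Script itself or its real implementation; the render helper and the {!@variables.name} reference form used in the template are assumptions made for illustration.

```python
import re

# Hypothetical model of template substitution: each {!@variables.<name>}
# placeholder is replaced with the variable's current value.
PLACEHOLDER = re.compile(r"\{!\s*@variables\.(\w+)\s*\}")

def render(template: str, variables: dict) -> str:
    """Return the template with every placeholder replaced by its value."""
    return PLACEHOLDER.sub(lambda m: str(variables[m.group(1)]), template)

template = (
    "Hello {!@variables.customer_name}, your order status is {!@variables.order_status}.\n"
    "Your order total is ${!@variables.order_total}."
)
variables = {"customer_name": "John", "order_status": "shipped", "order_total": 150}

prompt = render(template, variables)
print(prompt)
```

The point of the sketch is that the model never sees a placeholder: by the time the text reaches the LLM, every reference has already been resolved to a concrete value.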

Note: Template values are not limited to variables. They can also be expressions that use operators. See the documentation to learn more about supported operators.

Slot-Filling with ...

When exposing an action to the LLM as a tool, you often want the model to extract input values from the conversation. The ... syntax indicates that the LLM should “slot-fill” this parameter based on user input.

In this example, the LLM determines the order_id from the conversation, pulls the customer_id from a variable, and sets a constant limit of 10.
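The three kinds of input binding described above (slot-filled, variable-backed, and constant) can be modeled in Python as follows. This is a hedged sketch rather than Agent Script: Python's own Ellipsis stands in for the ... marker, and VariableRef, resolve_inputs, and the sample values are hypothetical names introduced for illustration.

```python
# Hypothetical model of input binding for an action exposed to the LLM.
# Python's Ellipsis (...) stands in for Agent Script's slot-fill marker.

class VariableRef:
    """Marks an input that is read from agent state rather than from the LLM."""
    def __init__(self, name: str):
        self.name = name

def resolve_inputs(bindings: dict, variables: dict, llm_extracted: dict) -> dict:
    """Resolve each input: slot-filled by the LLM, read from a variable, or constant."""
    resolved = {}
    for name, source in bindings.items():
        if source is ...:
            resolved[name] = llm_extracted[name]      # slot-filled from the conversation
        elif isinstance(source, VariableRef):
            resolved[name] = variables[source.name]   # pulled from agent state
        else:
            resolved[name] = source                   # fixed constant
    return resolved

bindings = {"order_id": ..., "customer_id": VariableRef("customer_id"), "limit": 10}
inputs = resolve_inputs(
    bindings,
    variables={"customer_id": "C-001"},        # assumed sample state
    llm_extracted={"order_id": "12345"},       # what the model pulled from the user's message
)
print(inputs)
```

Only order_id depends on the model's reading of the conversation; the other two inputs are fixed by the script and cannot be altered by the LLM.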

Note: The ... syntax is exclusively for slot-filling in action inputs. Don’t use it as a default value for variables; this is a common mistake.

Expression Operators

Agent Script supports a familiar set of operators for comparisons and logical expressions. You’ll use these in if statements and available when conditions.

Note: Agent Script supports + and - for arithmetic but does not currently support *, /, or %. If you need complex calculations, use a Flow or Apex action instead.

Conditionals

Conditionals (if/else) control which instructions execute or which prompts are assembled.

Note: else if is not currently supported. Use separate if statements instead.
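One way to work without else if is to give each separate if a condition that excludes the others, so that exactly one branch contributes text. The Python sketch below models that pattern; it is not Agent Script, and the shipping thresholds and messages are invented for illustration.

```python
# Model of replacing an else-if chain with separate, mutually exclusive ifs.
def shipping_note(order_total: int) -> list:
    """Return the prompt lines that apply to this order total."""
    lines = []
    if order_total >= 100:
        lines.append("Mention that shipping is free.")
    if order_total >= 50 and order_total < 100:
        lines.append("Mention the discounted shipping rate.")
    if order_total < 50:
        lines.append("Mention the standard shipping rate.")
    return lines

high = shipping_note(150)
middle = shipping_note(75)
low = shipping_note(10)
print(high, middle, low)
```

Because the ranges do not overlap, the result is the same as an else-if chain, at the cost of repeating part of each condition.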

The Essential Blocks

In your Salesforce DX project, Agent Script lives within the aiAuthoringBundles directory. For example, if you are building a ‘HelloWorld’ agent, your script resides in a .agent file at this path:

force-app/main/aiAuthoringBundles/HelloWorld/HelloWorld.agent

This file is the brain of your agent, containing the configuration, variables, and conversation topics we are about to explore.

Every Agent Script file is organized into blocks, which must appear in a specific order. Below is a bird’s-eye view of the top-level blocks in order.

Note: The language and connections blocks are out of scope for this post, but we will cover how to use connections in a dedicated post.

Let’s walk through each block using an example agent. Let’s call it an ‘Order Management’ agent that looks up orders for customers.

1. config — Agent Identity

The config block defines basic metadata for your agent. At minimum, you need an agent_name. For Agentforce Service Agents that interact with external customers, you also need a default_agent_user. The default_agent_user is optional for Employee Agents.

The agent_name serves as the unique identifier for your agent.

2. variables — State Management

Variables allow your agent to remember information across conversation turns. This is crucial because LLMs don’t inherently remember previous exchanges — you must explicitly track state.

Agent Script features two types of variables:

- Mutable variables: These can be read and written by the agent. It is recommended to provide a default value for mutable variables.

- Linked variables: These are read-only and pull their value from an external context (such as the current session). They must have a source and cannot have a default value.

To learn more about the supported data types, see the official documentation.

A few important notes:

- Boolean values must be capitalized: True or False, never true or false.

- The description field helps the LLM understand what the variable represents, which improves slot-filling accuracy.

- It is recommended that mutable variables have a default value; linked variables never include one.
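The difference between the two variable kinds can be modeled in Python as below. This is a conceptual sketch under stated assumptions, not Agent Script and not how the runtime stores state: AgentState, the variable names, and the session.id source key are all hypothetical.

```python
class AgentState:
    """Hypothetical model: mutable variables are writable, linked ones are read-only."""

    def __init__(self, mutable_defaults: dict, linked_sources: dict, context: dict):
        self._mutable = dict(mutable_defaults)   # name -> default value
        self._linked = dict(linked_sources)      # name -> key in the external context
        self._context = context                  # e.g. session data supplied by the platform

    def get(self, name: str):
        if name in self._linked:
            return self._context[self._linked[name]]
        return self._mutable[name]

    def set(self, name: str, value) -> None:
        if name in self._linked:
            raise PermissionError(f"linked variable '{name}' is read-only")
        if name not in self._mutable:
            raise KeyError(f"unknown variable '{name}'")
        self._mutable[name] = value

state = AgentState(
    mutable_defaults={"is_verified": False, "order_id": ""},
    linked_sources={"session_id": "session.id"},   # assumed source key
    context={"session.id": "abc-123"},
)
state.set("is_verified", True)   # mutable: the agent can update it across turns

try:
    state.set("session_id", "other")
    linked_write_blocked = False
except PermissionError:
    linked_write_blocked = True
```

Mutable variables start from their defaults and change as the conversation progresses, while a linked variable always reflects whatever its external source currently holds.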

3. system — Global Instructions and Messages

The system block defines your agent’s personality and provides two required messages: welcome (shown when a conversation starts) and error (shown when something goes wrong).

Note: These instructions apply globally across all topics. However, you can override them at the topic level when a specific topic requires different behavior. Below is how you would override at the topic level. Topics are covered in more depth below.

When a topic defines its own system.instructions, they replace the global instructions for that topic. This is useful when different conversation areas require distinct personalities or behaviors.

4. start_agent — Entry Point

The start_agent block is unique. Every user message — whether it’s the first or the fiftieth — begins execution here. This is your agent’s front door.

Typically, you use start_agent to welcome users and route them to the appropriate topic based on their intent.

Notice the available when conditions. The go_to_order_status transition only appears as an option when the customer is already verified. This is how you control flow based on state.
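The effect of an available when condition can be modeled as a filter applied to the tool list before the LLM sees it. The Python sketch below illustrates that idea only; it is not Agent Script. Apart from go_to_order_status, the names (Transition, visible_tools, go_to_verification, is_verified) are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Transition:
    name: str
    description: str
    available_when: Callable[[dict], bool]   # predicate over the agent's variables

def visible_tools(transitions: list, variables: dict) -> list:
    """Return the names of the transitions the LLM is allowed to choose from."""
    return [t.name for t in transitions if t.available_when(variables)]

transitions = [
    Transition("go_to_verification", "Verify the customer's identity", lambda v: True),
    Transition("go_to_order_status", "Look up an order", lambda v: v["is_verified"]),
]

before = visible_tools(transitions, {"is_verified": False})
after = visible_tools(transitions, {"is_verified": True})
print(before, after)
```

An unverified customer cannot be routed to order status because that option is never offered to the model in the first place, which is stronger than instructing the model not to pick it.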

Note: Actions and transitions are covered in separate sections below.

5. Topics — Conversation Areas

Topics represent distinct areas of functionality in your agent. Think of topics as chapters in a conversation: “verify identity,” “check order status,” or “process return.” Each topic has its own actions, instructions, and tools. Our agent will use two topics: verification and order_status.

The description is important — it helps the LLM understand when a topic is relevant, especially during topic classification in start_agent.

Key principle: Every user message starts at start_agent. From there, the agent transitions to the appropriate topic based on context.

6. Actions: Defining What a Topic Can Do

An action defines a capability — something the topic can call, such as a Flow, Apex class, or API. Actions are defined at the topic level in the actions: block.

Think of this as the definition layer: you are declaring which external interfaces exist and defining their inputs and outputs.

This tells Agentforce: “This topic has access to these two actions. Here is what they are called, what they require, and what they return.” To learn about target types and supported data types, see the official documentation.

Simply defining an action does not mean it runs. It is like declaring a function; nothing happens until it is called. So how do actions get called? That’s the job of the reasoning layer.

7. Reasoning: The Orchestration Layer

The reasoning block is where you orchestrate how the topic behaves. It acts as the bridge between action definitions and actual execution. Every topic must have a reasoning: block. Inside reasoning, you have two key sections:

- instructions: The logic and prompts that execute each turn

- actions: The tools you expose to the LLM (the “exposure layer”)

Reasoning Instructions: Logic + Prompts

The instructions: block inside reasoning is where the orchestration happens. You write a mix of:

- Procedural logic (using -> or :): Executes deterministically from top to bottom

- Prompt text (using |): Gets assembled and sent to the LLM

The arrow -> signals “this is procedural code.” The pipe | signals “this is prompt text.”

Note: The arrow is optional; you can use a plain colon (:) instead.

When this executes:

- Agentforce processes the logic top to bottom.

- Prompt text accumulates based on which conditions are true.

- The final assembled prompt is sent to the LLM.

Scenario A: order_id = ""

Assembled prompt → “Help the customer with their order. Ask them for their order ID.”

Scenario B: order_id = "12345"

Assembled prompt → “Help the customer with their order. Their order ID is 12345.”

Notice you’re dynamically constructing the prompt based on state.

Note: All pipe text that passes conditional checks is appended into a single prompt rather than a sequence of discrete tasks. The LLM receives one unified instruction block. And here’s the key part: the reasoning loop re-executes these instructions with every turn. As variables change across turns, different conditions match, and the assembled prompt is rewritten accordingly. This ensures the LLM always receives a fresh, context-appropriate instruction set instead of a running history of past instructions.
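Scenarios A and B can be reproduced with a small Python model of prompt assembly. This is a sketch of the behavior described above, written in Python rather than Agent Script; the assemble_prompt function is a hypothetical stand-in for what the runtime does with the instructions block.

```python
def assemble_prompt(variables: dict) -> str:
    """Run the logic top to bottom and collect the prompt lines whose conditions hold."""
    lines = ["Help the customer with their order."]
    if variables["order_id"] == "":
        lines.append("Ask them for their order ID.")
    else:
        lines.append(f"Their order ID is {variables['order_id']}.")
    return " ".join(lines)   # one unified instruction block

# The instructions are re-evaluated on every turn against the current state.
variables = {"order_id": ""}
turn_1 = assemble_prompt(variables)      # Scenario A

variables["order_id"] = "12345"          # e.g. captured during the first turn
turn_2 = assemble_prompt(variables)      # Scenario B

print(turn_1)
print(turn_2)
```

The second prompt is built from scratch rather than appended to the first, which is why the LLM never sees the stale "ask for the order ID" instruction once the ID is known.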

Calling Actions from Instructions

There are two different ways to invoke actions:

Method 1: Deterministic Execution with run

Use run inside reasoning.instructions when the action must execute under specific conditions without requiring LLM judgment.

The run command:

- Executes immediately when the code path is reached

- Uses with to pass inputs

- Uses set to capture outputs into variables

When to use: Automatic data fetching, required validations, or any logic that must occur regardless of user input.
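The run, with, and set mechanics can be modeled in Python as follows. This is an illustrative sketch, not Agent Script syntax: the run helper, the stubbed get_order function, its return values, and the variable names are assumptions.

```python
def get_order(order_id: str) -> dict:
    """Stand-in for a Flow or Apex action; the returned outputs are hypothetical."""
    return {"status": "shipped", "total": 150}

def run(action, with_inputs: dict, set_outputs: dict, variables: dict) -> None:
    """Call the action with its inputs, then copy the chosen outputs into variables."""
    outputs = action(**with_inputs)
    for variable_name, output_name in set_outputs.items():
        variables[variable_name] = outputs[output_name]

variables = {"is_verified": True, "order_id": "12345", "order_status": ""}

# Deterministic path: the condition alone decides; no LLM judgment is involved.
if variables["is_verified"] and variables["order_id"] != "":
    run(
        get_order,
        with_inputs={"order_id": variables["order_id"]},
        set_outputs={"order_status": "status"},
        variables=variables,
    )

print(variables["order_status"])
```

Because the call happens before the prompt is sent, later prompt text in the same turn can already refer to the captured values.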

Method 2: LLM Tool in reasoning.actions

Use reasoning.actions when you want the LLM to decide whether to call an action based on conversation context.

This creates a tool called lookup_order that:

- References the defined action (@actions.get_order)

- Has a description the LLM uses to decide when to call it

- Uses ... for slot-filling, allowing the LLM to extract values from the conversation

- Captures outputs with set

When to use: User-initiated actions, optional features, or any scenario where conversation context should drive the decision.

Note: For brevity, the example above shows only one action, but a topic can include multiple actions. You can also reference these actions in the prompt with {!@actions}. See the example below.

Setting Variables

There are two common ways to set variables at runtime:

- Capture action outputs: Use set to store results from an executed action.

- LLM slot-filling: Use @utils.setVariables to let the LLM extract values directly from the conversation.

When to use: Use action outputs for data from external systems, Flows, or Apex. Use setVariables for collecting user input through conversation.

Flow of Control: How Execution Works

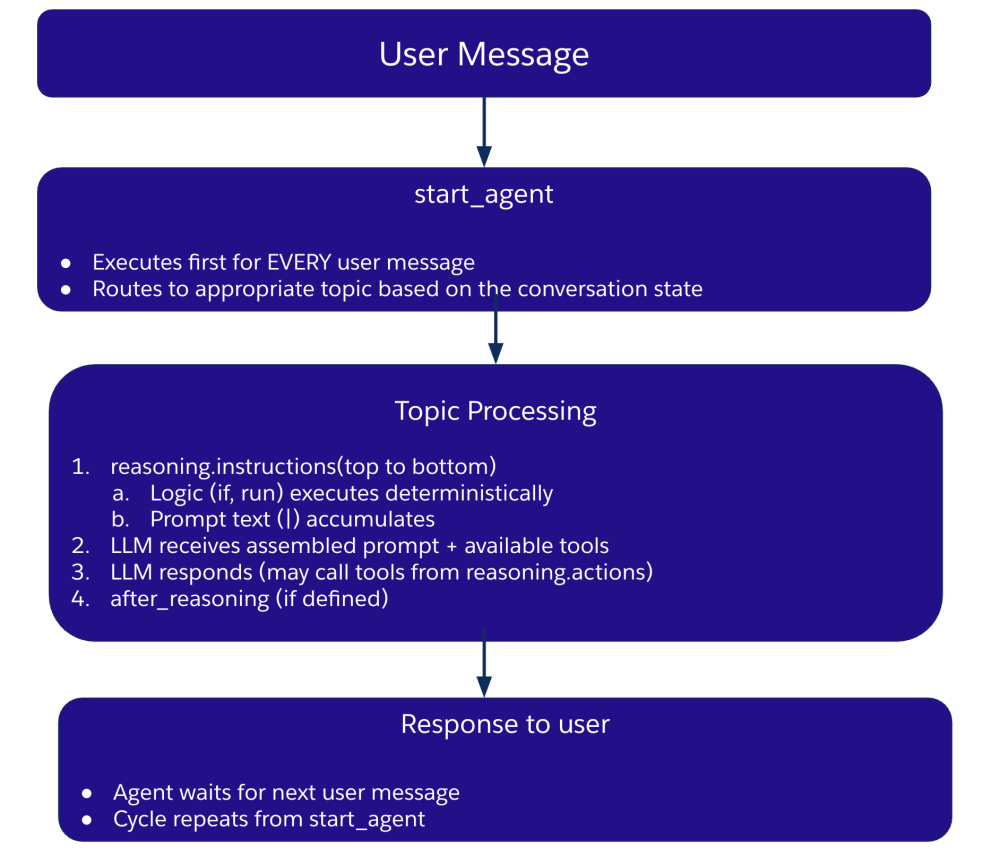

The diagram above shows how topics, actions, and reasoning work together at runtime.

Key principles to remember:

- Agent Script gives you hybrid reasoning: This combines LLM flexibility with programmatic control. The key mental models include:

  - Topics: Conversation areas (what your agent can talk about)

  - Actions: Definitions at the topic level (what can be called)

  - Reasoning: An orchestration layer containing instructions and exposed tools

- Every message starts at start_agent: This occurs even mid-conversation.

- Instructions execute top-to-bottom: The LLM only sees the final, assembled prompt.

- Transitions are one-way: When you transition topics via @utils.transition, you do not automatically return to the previous topic.

- Wait for input after completion: The flow then cycles back to start_agent.

Note: There are ways to return to the original topic via topic delegation, which we cover in the Topic Delegation (Returns to Caller) section below.

After Reasoning: Post-Processing Hook

The optional after_reasoning block runs after the LLM completes its response. Use it for deterministic cleanup or transitions that should always occur once a topic finishes.

Unlike reasoning.actions, where the LLM chooses whether to act, after_reasoning logic runs deterministically: whenever its conditions are met, it executes without any LLM decision. This is ideal for enforcing guardrails or mandatory flows after the LLM has spoken.
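The ordering within a turn can be modeled in Python as below. This is a conceptual sketch of the lifecycle described above, not Agent Script or the real runtime; run_turn, the stub LLM functions, and the is_verified variable are hypothetical.

```python
def run_turn(variables: dict, llm) -> dict:
    """Model one turn of a verification topic: reasoning first, then the hook."""
    # 1. Reasoning: the assembled prompt goes to the LLM, which responds.
    reply = llm("Verify the customer's identity.", variables)

    # 2. after_reasoning: deterministic logic that runs once the LLM is done.
    next_topic = "verification"
    if variables["is_verified"]:
        next_topic = "order_status"      # mandatory hand-off, not an LLM choice
    return {"reply": reply, "next_topic": next_topic}

def llm_that_verifies(prompt: str, variables: dict) -> str:
    """Stub LLM whose tool call marks the customer as verified."""
    variables["is_verified"] = True
    return "Thanks, you're verified."

def llm_that_asks(prompt: str, variables: dict) -> str:
    """Stub LLM that still needs more information."""
    return "Could you confirm your email address?"

verified = run_turn({"is_verified": False}, llm_that_verifies)
pending = run_turn({"is_verified": False}, llm_that_asks)
print(verified["next_topic"], pending["next_topic"])
```

The hand-off depends only on state, so it happens the moment verification succeeds, regardless of what the LLM chose to say.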

Topic Transitions

Moving between topics uses different syntax depending on the context:

- LLM-selected: In reasoning.actions, use @utils.transition.

The LLM sees this as an available tool and decides when to use it based on conversation context.

- Deterministic: In reasoning.instructions or after_reasoning, use a bare transition to for immediate execution.

These execute immediately once the condition is met, requiring no decision from the LLM.

Critical syntax rules:

- @utils.transition to → only in reasoning.actions

- bare transition to → only in reasoning.instructions: -> or after_reasoning

Note: Never mix these — using the wrong syntax in the wrong place will cause errors.

Topic Delegation (Returns to Caller)

While the methods above are one-way, you can use a direct @topic.<name> reference to delegate execution to another topic. Once the referenced topic completes, the flow automatically returns to the original topic.

This is useful when you need another topic’s expertise but want to continue the current conversation afterward.
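The contrast between a one-way transition and a delegation can be pictured with a call-stack model. The Python sketch below is an analogy only: the stack is an assumption made to illustrate the return-to-caller behavior, not a description of how the runtime is implemented, and the Conversation class is hypothetical.

```python
class Conversation:
    """Hypothetical model of the active topic as the top of a stack."""

    def __init__(self, start_topic: str):
        self.stack = [start_topic]

    @property
    def active(self) -> str:
        return self.stack[-1]

    def transition(self, topic: str) -> None:
        """One-way: replace the active topic, leaving nothing to return to."""
        self.stack[-1] = topic

    def delegate(self, topic: str) -> None:
        """Delegation: remember the caller, then run the referenced topic."""
        self.stack.append(topic)

    def complete(self) -> None:
        """When a delegated topic finishes, control returns to the caller."""
        if len(self.stack) > 1:
            self.stack.pop()

delegated = Conversation("order_status")
delegated.delegate("verification")
during_delegation = delegated.active
delegated.complete()
after_delegation = delegated.active      # back in the original topic

moved = Conversation("order_status")
moved.transition("verification")
moved.complete()
after_transition = moved.active          # still in the new topic

print(during_delegation, after_delegation, after_transition)
```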

Conclusion

In this post, we explored the Agent Script syntax and its foundational building blocks. To explore more code samples, check out the Agent Script Recipes Sample App. In the next post, we will dive into how to use developer tools like Agentforce DX and the new Agent Builder to develop, debug, test, and deploy agents.