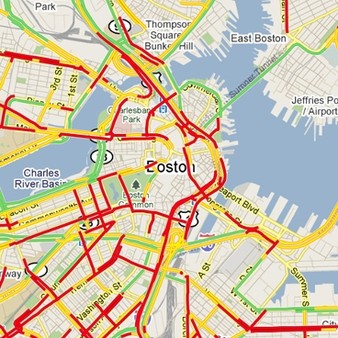

We all hate traffic. We all hate commuting. But what is most distressing is the sheer number of hours we spend traveling to and from an office, often with little or no productivity. As an avid telecommuter, it’s clear to me that there are definitely better ways to spend my time than having my blood pressure spike on the freeway, or waiting in vain for a solid WiFi signal on the subway.

Data, the lifeblood of any Enterprise, is unfortunately no different. It is subjected to delays, traffic jams, and endless transformations before it “arrives” at its destination – ideally a “single source of truth” to provide a “360-degree view of the customer”.

As Enterprise Architects all know, untangling the kudzu of legacy systems, historical integration approaches and security perimeters is neither fun nor durable. In all too many large IT projects, it can only be described as a brutal and frustrating endeavor. Just as important, consider Gartner’s prediction that integration costs will absorb well over 50% of project expenditures by 2018[1], and that integration often takes up the lion’s share of development, testing, and deployment time. And, unfortunately, such integrations typically break on the next upgrade of any of the applications in the chain.

How did we get into this mess?

What has caused this lopsided effort and near-impossible goal of providing “golden record” data to consuming systems? A few well-worn myths come to mind:

- We actually need to move the data – in many cases, the majority of data that is moved from point A to point Z is rarely even accessed. Typically, the required data elements from ERP or other transactional data boil down to a smallish number of fields. So why are we moving it all?

- We cannot touch “Transactional Systems” – for decades, it was forbidden to access live transactional systems, for fear of negatively impacting their performance. This in turn led to an entire sea of data manipulation tools, including Decision Support, Data Warehousing, Data Marts, Extract, Transform, Load (ETL), etc. The end result? More complexity, more delays, and (maybe) not-so-much better data.

- We need to use our SOA layer to access everything – the heyday of Service-Oriented Architecture (SOA) led to massive, slow-moving SOAP-based integrations, Enterprise Service Buses, and endless abstraction.

An Approach: Good Enough Data (that Telecommutes)!

Breaking through the logjam of “surfacing” Enterprise data in a rational way can be approached through two avenues that might surprise the reader:

- What’s Good Enough? Instead of pouring massive expenditure and effort into moving data to a centralized location, consider designating (by subject area, geography, or operating unit) the “best” source of definitive data. This will be the source of truth for all downstream applications. In parallel, create a data roadmap that will act as a “forcing function” across your application portfolio. Over time, you can pivot to the Nirvana of “perfect” data, but in the meantime, settle for “good”. Depending on the use cases in play, this enables business applications to move rapidly, and liberates IT from “boiling the ocean”.

- Why not Telecommute? Vigorously explore data-by-reference technologies, as exemplified by an industry standard that actually works: OData (heavily supported by industry leaders including Salesforce, Microsoft, and SAP). OData “endpoints” are accessed over HTTP, so the consuming application can hold references to the data rather than copies of it. Drilling into a result set then returns small, up-to-date amounts of data to the target application in near real-time. Imagine combining Salesforce Opportunity data with current SAP Order data, Oracle Financials Close Rate data, or an arbitrary column of Microsoft SQL Server data, all without coding. OData customers have successfully integrated Salesforce with SAP, for example, in a single afternoon.
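To make the “telecommuting” idea concrete, here is a minimal sketch of what asking an OData endpoint for just a few fields might look like. It only builds the request URL using the standard OData query options `$select`, `$filter`, and `$top`. The service root `https://erp.example.com/odata`, the `Orders` entity set, and the field names are hypothetical placeholders, not taken from any real system.

```python
from urllib.parse import quote, urlencode


def build_odata_url(service_root, entity_set, select=None, filter_expr=None, top=None):
    """Build an OData query URL that requests only the data actually needed."""
    params = {}
    if select:
        # $select limits the response to the named fields.
        params["$select"] = ",".join(select)
    if filter_expr:
        # $filter limits the response to matching rows.
        params["$filter"] = filter_expr
    if top is not None:
        # $top caps the number of rows returned.
        params["$top"] = top

    base = f"{service_root.rstrip('/')}/{entity_set}"
    if not params:
        return base
    # Keep '$', ',' and quotes readable; spaces become %20.
    query = urlencode(params, quote_via=quote, safe="$,'")
    return f"{base}?{query}"


# Hypothetical endpoint and fields, for illustration only.
url = build_odata_url(
    "https://erp.example.com/odata",
    "Orders",
    select=["OrderID", "Amount"],
    filter_expr="Status eq 'Open'",
    top=5,
)
print(url)
```

The consuming application would issue an HTTP GET against a URL like this only when a user actually drills into the record, which is why so little data has to “commute”.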

The Ultimate Balancing Act

Enterprise Architects are in a difficult, but enviable position: they need to balance sunk costs in critical Systems of Record while reacting to (and predicting!) the next set of capabilities needed to provide ever-increasing business value. These new “Systems of Intelligence” must blend the best of the tried-and-true lessons learned from the SOA age with a rational set of choices around mobile, wearables, and the Internet of Things (IoT) technologies – no small feat.

As Modern Architects[2] learn to leverage “good enough” data and “play the ball” (data) where it lies, they are fundamentally changing the conversation with their business partners. The shift to Systems of Intelligence is clearly underway, with integration morphing from heavy design and coding to point-and-click configuration.

Is your data ready to sit out the next traffic jam?