Deep Learning Development Cycle

You might already be familiar with the software development life cycle (SDLC). The high-level phases of the SDLC are plan, design, code, test, and deploy.

The deep learning life cycle (DLLC) has many similarities to the SDLC, but also a few key differences. Knowing how the two differ helps you prepare for, and plan, your deep learning implementation. At a high level, the DLLC phases are: identify the desired outcome, gather and prepare data, create the model, and test the model.

Here are some key principles of the DLLC.

Data is at the center of deep learning. A model is created from data, so your model is only as good as the data it’s based on. After you define your use case, or the problem that you’re solving, you must determine whether you have enough data. If you don’t have enough good-quality data, the first problem to solve is how to get the data your implementation requires.

For example, suppose you want to create a model that analyzes phrases from chat transcripts to identify what a user is trying to accomplish. To create such a model, you need existing chat data. If you implemented chatbot functionality a month ago, you might not yet have enough data to build an accurate model. If you have six months of transcripts from an active chatbot, then you might have enough data.
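One way to answer the “do I have enough data?” question is to count the labeled examples you have for each label before you start training. The following is a minimal Python sketch; the label names, the example data, and the `MIN_EXAMPLES_PER_LABEL` threshold are hypothetical placeholders, not an Einstein Language requirement, so substitute a minimum based on your own experiments or the platform’s guidance.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Hypothetical threshold for illustration only; choose your own based on
# experiments or the platform's documented guidance.
MIN_EXAMPLES_PER_LABEL = 100


def labels_needing_data(
    examples: Iterable[Tuple[str, str]],
    labels: List[str],
    minimum: int = MIN_EXAMPLES_PER_LABEL,
) -> Dict[str, int]:
    """Return how many more examples each under-represented label needs.

    `examples` is a sequence of (text, label) pairs, for example phrases
    pulled from chat transcripts and labeled with the user's intent.
    """
    counts = Counter(label for _, label in examples)
    # Counter returns 0 for labels that have no examples at all.
    return {label: minimum - counts[label] for label in labels if counts[label] < minimum}


# Hypothetical data: a short period of chatbot traffic, labeled by hand.
examples = [("where is my order", "order_status")] * 120 + [
    ("reset my password", "password_reset")
] * 30
print(labels_needing_data(examples, ["order_status", "password_reset", "refund"]))
# {'password_reset': 70, 'refund': 100}
```

An empty result means every label meets the minimum; a nonempty one tells you which labels to focus data collection on first.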

A lot of time is spent gathering data at the beginning. This principle follows from the previous one. Even if you know that you have enough data available to create a model, chances are that the data needs some processing before you can use it.

If you’re working with text data, you might need to parse phrases out of a transcript, and you’ll likely need to format the text data the way the API expects it. If you’re working with image data for object detection, you need bounding box data for the items in each image.
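As an illustration of that preparation step, the following sketch pulls the user’s phrases out of a plain-text transcript and writes labeled rows as CSV. It assumes a hypothetical transcript format in which each line starts with a speaker tag such as `User:` or `Bot:`, and a simple two-column (text, label) CSV; confirm the exact file format your dataset upload requires in the Einstein Language documentation. In this sketch the labels are assigned by hand.

```python
import csv
import io
from typing import List, Tuple


def extract_user_phrases(transcript: str, speaker: str = "User:") -> List[str]:
    """Return the non-empty lines spoken by `speaker` (hypothetical 'Speaker: text' format)."""
    phrases = []
    for line in transcript.splitlines():
        line = line.strip()
        if line.startswith(speaker):
            text = line[len(speaker):].strip()
            if text:
                phrases.append(text)
    return phrases


def to_csv(rows: List[Tuple[str, str]]) -> str:
    """Serialize (text, label) rows; the csv module quotes phrases that contain commas."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerows(rows)
    return buffer.getvalue()


transcript = """User: Where is my order?
Bot: Let me check that for you.
User: Also, can I change the shipping address?"""

phrases = extract_user_phrases(transcript)
# A reviewer assigns a label to each phrase (hypothetical labels).
labeled = list(zip(phrases, ["order_status", "update_address"]))
print(to_csv(labeled))
```

Using the `csv` module rather than joining strings by hand matters here: the second phrase contains a comma, so it must be quoted to stay in one column.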

At the start of your project, you must also define the categories, or labels, into which your data falls. In addition to gathering “correct” examples of the types of data you expect the model to classify, you also want to gather “incorrect” data, examples that don’t fit any of your labels, to create a negative label.
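Here is a minimal Python sketch of assembling a dataset that includes a negative label. The label set, the example phrases, and the negative label name `other` are hypothetical; the point is that every positive example must use a label you defined up front, and all the out-of-scope phrases you gather share the single negative label.

```python
from typing import Iterable, List, Set, Tuple

NEGATIVE_LABEL = "other"  # hypothetical name for the negative label


def build_dataset(
    positive: Iterable[Tuple[str, str]],
    negative_texts: Iterable[str],
    labels: Set[str],
) -> List[Tuple[str, str]]:
    """Combine labeled in-scope examples with out-of-scope ("incorrect") examples."""
    rows = []
    for text, label in positive:
        # Catch typos and labels that were never defined for the project.
        if label not in labels:
            raise ValueError(f"undefined label {label!r} for example {text!r}")
        rows.append((text, label))
    # Phrases the model should not map to any real intent share the negative label.
    rows.extend((text, NEGATIVE_LABEL) for text in negative_texts)
    return rows


labels = {"order_status", "password_reset"}
dataset = build_dataset(
    positive=[("where is my order", "order_status"), ("reset my password", "password_reset")],
    negative_texts=["what's the weather like", "tell me a joke"],
    labels=labels,
)
print(dataset)
```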

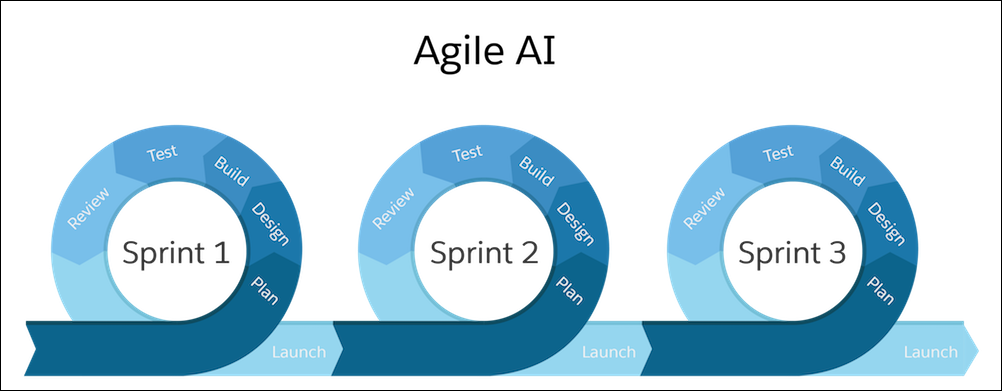

The deep learning cycle is iterative. The model creation process involves frequent loops of model creation and testing. The overall process follows these steps:

1. Use initial data to create the dataset and model.

2. Test the model.

3. Refine the dataset based on the model test results.

4. Create a new test model, and compare it with the previous models.

5. When you’re satisfied with the results, create a final production model from the most recent dataset.
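The steps above can be sketched as a loop. This is a toy, self-contained Python illustration rather than a real workflow: `train_word_overlap` is a hypothetical stand-in for a real training call (it is not an Einstein Language API), refining the dataset is modeled as pulling extra examples for poorly performing labels from a reserve pool, and the 0.9 accuracy target is arbitrary.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]     # (text, label)
Model = Callable[[str], str]  # text -> predicted label


def train_word_overlap(dataset: List[Example]) -> Model:
    """Toy stand-in for training: predict the label whose examples share the most words."""
    words_by_label: Dict[str, Counter] = {}
    for text, label in dataset:
        words_by_label.setdefault(label, Counter()).update(text.lower().split())

    def predict(text: str) -> str:
        words = text.lower().split()
        # Ties go to the label seen first in the dataset.
        return max(words_by_label, key=lambda lbl: sum(words_by_label[lbl][w] for w in words))

    return predict


def accuracy(model: Model, test_set: List[Example]) -> float:
    return sum(model(text) == label for text, label in test_set) / len(test_set)


def develop_model(dataset, test_set, reserve, target=0.9, max_rounds=5):
    dataset, reserve, history = list(dataset), list(reserve), []
    for _ in range(max_rounds):
        model = train_word_overlap(dataset)         # steps 1 and 4: create a (test) model
        history.append(accuracy(model, test_set))  # step 2: test it; keep scores to compare rounds
        if history[-1] >= target:
            break
        # Step 3: refine the dataset with more examples for labels the model got wrong.
        weak = {label for text, label in test_set if model(text) != label}
        additions = [ex for ex in reserve if ex[1] in weak]
        if not additions:
            break  # nothing left to add; the data itself needs rethinking
        dataset += additions
        reserve = [ex for ex in reserve if ex[1] not in weak]
    # Step 5: build the final model from the most recent dataset.
    return train_word_overlap(dataset), history


dataset = [("reset my password", "password_reset"), ("where is my order", "order_status")]
test_set = [("track a package", "order_status"), ("forgot password", "password_reset")]
reserve = [("track package shipping", "order_status"), ("forgot my login password", "password_reset")]

final_model, history = develop_model(dataset, test_set, reserve)
print(history)  # [0.5, 1.0]
```

Each entry in `history` is one round’s test accuracy, which is how a new test model gets compared with the previous ones. With a real service, the training and testing calls would be API requests instead of local functions.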

Repeat steps 2–4 as often as necessary until you end up with an accurate model. By using Einstein Language, you can focus on the data and quickly get a prototype model up and running. The Einstein Language APIs let you get model metrics, test accuracy, and iterate quickly.
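Einstein Language returns model metrics through its APIs, so you don’t need to compute them yourself, but it helps to know what common metrics such as accuracy and per-label F1 mean. This minimal Python sketch computes both from hypothetical (expected, predicted) label pairs collected while testing a model; a low F1 for one label hints at where to add or clean up data in the next iteration.

```python
from collections import Counter
from typing import Dict, List, Tuple


def evaluate(pairs: List[Tuple[str, str]]) -> Tuple[float, Dict[str, float]]:
    """Return overall accuracy and per-label F1 for (expected, predicted) pairs."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for expected, predicted in pairs:
        if expected == predicted:
            tp[expected] += 1
        else:
            fp[predicted] += 1  # the predicted label was wrong
            fn[expected] += 1   # the expected label was missed
    accuracy = sum(tp.values()) / len(pairs)
    f1 = {}
    for label in sorted(set(tp) | set(fp) | set(fn)):
        precision = tp[label] / (tp[label] + fp[label]) if tp[label] + fp[label] else 0.0
        recall = tp[label] / (tp[label] + fn[label]) if tp[label] + fn[label] else 0.0
        f1[label] = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


pairs = [
    ("order_status", "order_status"),
    ("order_status", "password_reset"),
    ("password_reset", "password_reset"),
    ("password_reset", "password_reset"),
]
acc, f1 = evaluate(pairs)
print(acc, {label: round(score, 2) for label, score in f1.items()})
# 0.75 {'order_status': 0.67, 'password_reset': 0.8}
```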

The deep learning cycle is iterative, even after a model is in production. Once the model is deployed, you continue to look for ways to improve it.

For example, you might find that certain data is consistently misclassified, perhaps data that you didn’t expect to be sent for classification. In this case, you might add a new label and the corresponding data to the dataset, and then retrain the model.
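If you log production predictions and have someone review a sample of them, counting recurring (expected, predicted) mismatches shows which errors are consistent rather than one-off. The following sketch assumes a hypothetical review log of (text, expected_label, predicted_label) rows, and the `min_count` cutoff is arbitrary. An expected label that the model doesn’t have yet, such as the hypothetical `cancel_subscription` below, signals that you might add that label and its data to the dataset.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Review = Tuple[str, str, str]  # (text, expected_label, predicted_label), hypothetical log format


def recurring_errors(reviews: Iterable[Review], min_count: int = 3) -> List[Tuple[Tuple[str, str], int]]:
    """Return (expected, predicted) mismatches seen at least `min_count` times, most frequent first."""
    mistakes = Counter(
        (expected, predicted) for _, expected, predicted in reviews if expected != predicted
    )
    return [(pair, n) for pair, n in mistakes.most_common() if n >= min_count]


reviews = [
    ("cancel my plan", "cancel_subscription", "order_status"),
    ("stop my subscription", "cancel_subscription", "order_status"),
    ("I want to cancel", "cancel_subscription", "order_status"),
    ("money back please", "refund", "password_reset"),
    ("where is my order", "order_status", "order_status"),
]
print(recurring_errors(reviews))
# [(('cancel_subscription', 'order_status'), 3)]
```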

Your business might also change, requiring you to add a new label and data to the dataset and retrain the model. Expect your models to keep changing after they’re in production, and plan the time and resources needed to maintain them.