At TrailheaDX in 2018, a few of us Salesforce evangelists had the brilliant idea to build a demo with robots. The idea was centered around the Fourth Industrial Revolution and showing how Robotics and Artificial Intelligence could play a big role in our lives. We wanted to show how Salesforce could be at the center of everything: your customers, partners, employees, and robots.

After the crazy success and fun we experienced with Robotics Ridge, we decided we wanted to bring it back and make it better than ever for Dreamforce 2018. This is the story of what we added to our demo, what we improved and how we overcame the challenges we faced at TrailheaDX. If you want to read more about the original demo and challenges, check out Philippe’s post.

An order fulfillment system

Robotics Ridge was an order fulfillment process with two separate pipelines. We wanted to show that an ordering process could have two simultaneous flows, similar to a real production factory. The left side and the right side of the stage each ran the same demo, but could be controlled independently.

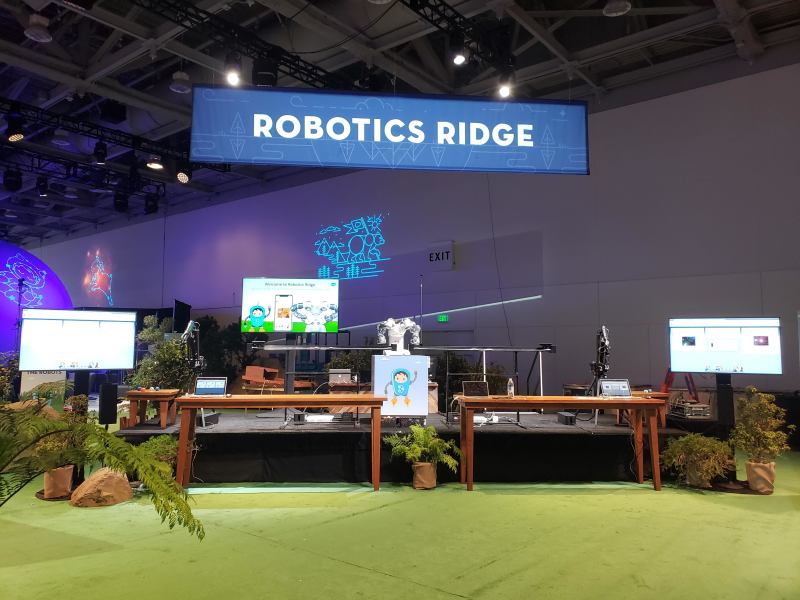

Image of Robotics Ridge before the show began.

If you look carefully at the image above, you’ll notice there are five different robots on stage. From left to right you will see an ARM, a custom Linear Robot, an ABB YuMi, another custom Linear Robot and a final ARM. These robots worked together to pick up a requested payload and deliver it to the front of the stage near the YuMi.

ARM

Image of the ARM robot on its own

The ARM robot is a robot made by the company GearWurx. It is mounted on a tripod and can be controlled in a few ways. It has a manual controller that has a slider for each degree of freedom, but we wanted the arm movements to be automated. We used a Raspberry Pi 3B+ with a servo hat. The ARM was also equipped with a Raspberry Pi Camera attached near the gripper. We wrote a Node.js app to control everything, from the movement to the picture capture.

Linear robot

Image of the Linear Robot on its own

Our linear robot was a custom robot we built just for TrailheaDX and then modified for Dreamforce. It is controlled by a Raspberry Pi 3B with a Pi-Plate Motor Plate for movement and an Arduino Mega to control NeoPixel lights that would guide your eye to where your package was, all driven by a Python script. The lights, not pictured here, were attached to the front of the robot on the top bar near the cart. The build was inspired by the OpenBuilds Linear Actuator build and was created using Aluminum V-Slot beams from OpenBuilds.

YuMi

Image of the YuMi on its own

The YuMi is a robot from ABB. It is a human-friendly robot with many collision sensors that keep it from hurting anything it hits. To help the YuMi see, we added a Raspberry Pi 3B+ with a Raspberry Pi Camera to give it ‘eyes’. These were attached right on top of the YuMi. The YuMi was controlled by a Python script running on an attached computer, and the ‘eyes’ were controlled by a simplified version of the ARM’s Node.js app.

The demo

Our demo started with Salesforce Mobile. As an attendee, you would arrive at our booth and be directed to our Salesforce Mobile App. You would have the option to select a type of Hacky Sack to order: a Soccer ball, Basketball, or Globe.

Image of the mobile app request screen

Once you selected your item, the robots came to life! Your request on the mobile app would trigger a series of Platform Events that served as the driving force of the entire pipeline. The first event would tell our ARM robot to move and ‘look’ at the table for your requested item. We equipped our ARM with a camera. It would take a photo and upload it to the platform, where Einstein Object Detection would be run on the picture. Object detection returns a prediction confidence for what it sees, as well as the location of each item in the photo.

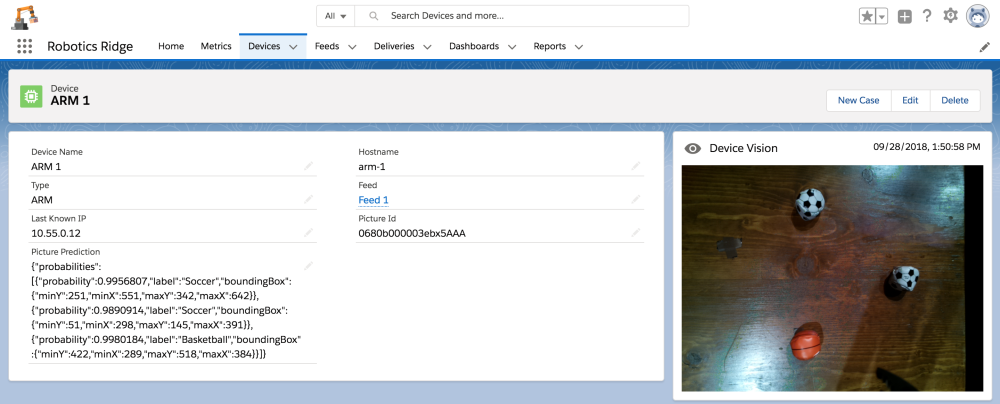

A screenshot showing the last image the ARM 1 device took at Dreamforce.

Based on the prediction confidence, the ARM would then move to pick up the item and transfer it to the Linear Robot. In the image above we see two soccer balls and a basketball, and the ARM would have been able to pick up any of them had it been requested. The ARM is not a human-friendly robot because it has no motion sensors or any other way to tell that a human is nearby. To protect the humans interacting with our demo, the ARM would move to a safe ‘home’ position after dropping off the requested item. Once it was all the way home, it would send a platform event to let the system know it was safe to continue.
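To make the pickup decision concrete, here is a minimal Python sketch of how a detection could be chosen from an object detection result. It is not our production code: the response shape (a list of detections with `label`, `probability` and a `boundingBox` of `minX`/`minY`/`maxX`/`maxY`), the `min_confidence` threshold and the sample values are all assumptions made for illustration.

```python
# Illustrative sample only: two soccer balls and a basketball, as in the photo.
# The field names and numbers below are assumptions, not real demo data.
SAMPLE_DETECTIONS = [
    {"label": "soccer_ball", "probability": 0.91,
     "boundingBox": {"minX": 40, "minY": 60, "maxX": 140, "maxY": 160}},
    {"label": "soccer_ball", "probability": 0.84,
     "boundingBox": {"minX": 200, "minY": 80, "maxX": 300, "maxY": 180}},
    {"label": "basketball", "probability": 0.95,
     "boundingBox": {"minX": 350, "minY": 50, "maxX": 450, "maxY": 150}},
]


def choose_target(detections, requested_label, min_confidence=0.8):
    """Return the most confident detection of the requested item, or None."""
    candidates = [
        d for d in detections
        if d["label"] == requested_label and d["probability"] >= min_confidence
    ]
    if not candidates:
        return None  # nothing suitable on the table
    return max(candidates, key=lambda d: d["probability"])


def box_center(box):
    """Return the (x, y) pixel center of a bounding box."""
    return ((box["minX"] + box["maxX"]) / 2, (box["minY"] + box["maxY"]) / 2)


if __name__ == "__main__":
    target = choose_target(SAMPLE_DETECTIONS, "soccer_ball")
    if target is not None:
        print(target["label"], box_center(target["boundingBox"]))
```

The center of the chosen bounding box is the kind of value an arm controller could translate into a gripper position. When `choose_target` returns `None`, the item is treated as not being on the table.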

Our linear bot came next, and its job was to get the item to the YuMi. Once it reached the YuMi, it would send a platform event to Salesforce, which then sent the next platform event, this time telling the YuMi to do its job. The YuMi would pick up the item and hold it up to a camera to take a final image. This image was then uploaded to the platform, and Einstein Image Recognition was run on it. Einstein would then tell Salesforce which item it saw. This served as a verification step to make sure the correct item was delivered to the YuMi.
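The hand-off pattern described above, where each robot reports back to Salesforce and Salesforce sends the next command, can be sketched as a small lookup table. This is only an illustration of the pattern: the event names are hypothetical, since the real Platform Event names are not listed in this post.

```python
# Hypothetical event names, chosen for illustration only.
# Keys are reports arriving at the hub; values are the commands it sends next.
NEXT_COMMAND = {
    "order_requested": "arm_pick_up",    # mobile app request wakes the ARM
    "arm_home": "linear_deliver",        # ARM is safely home, cart may move
    "linear_arrived": "yumi_verify",     # item reached the YuMi
}


def next_command(report):
    """Return the command to publish for an incoming report, or None."""
    return NEXT_COMMAND.get(report)


def replay(reports):
    """Return the commands the hub would send for a sequence of reports."""
    return [NEXT_COMMAND[r] for r in reports if r in NEXT_COMMAND]


if __name__ == "__main__":
    for command in replay(["order_requested", "arm_home", "linear_arrived"]):
        print(command)
```

Keeping the routing in one place means no robot needs to know about any other robot. Each one only listens for its own command and reports when it is done.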

A screenshot showing the last image the YuMi 1 device took at Dreamforce

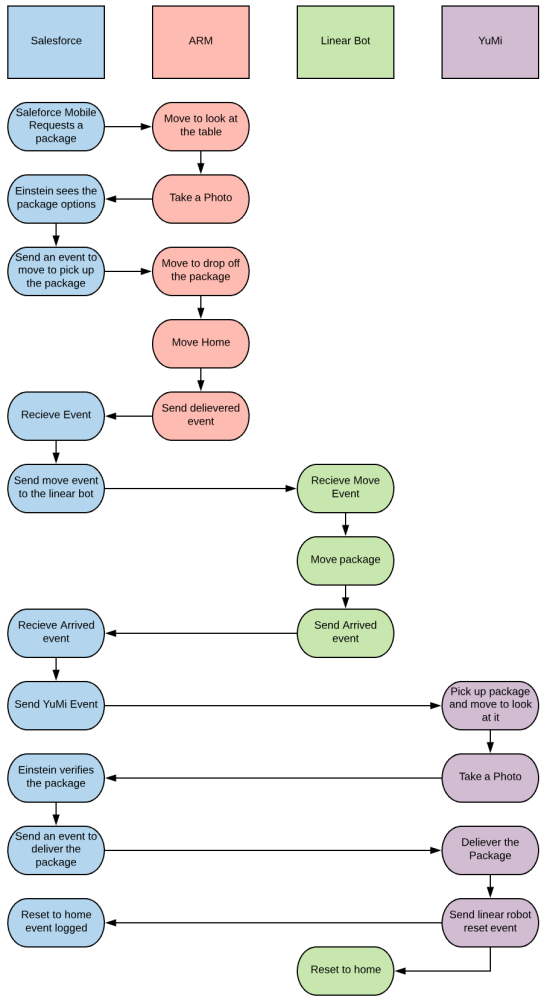

Below you can see how all of the events were sent/received between each system.

Image of the different events sent back and forth

Using Cases to troubleshoot and make our demo run smoothly

While one might hope that the demo would always run smoothly, we knew that wasn’t reality. In order to have Quality Assurance (QA), we developed a few ways for our system to let us know when things had gone wrong.

Our first use case was when an item was requested that was no longer ‘in stock.’ If Einstein Object Detection did not find the item you requested on the table, the mobile app would prompt you to add any important details and then save a case to let someone know to restock the table.

The second use case was related to the YuMi and the verification step. If something happened in the pipeline (e.g. the ARM picked up the wrong item, didn’t pick anything up, or something interfered with the linear robot), the YuMi would catch it. Not only would Salesforce Mobile prompt you to save a case, but the YuMi would also drop the item in a different area for misdeliveries.
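The two use cases above boil down to a small decision rule. The sketch below is one way to express it in Python. The function name, its arguments and the `Outcome` values are hypothetical and only mirror the behavior described in this section.

```python
from enum import Enum
from typing import Optional, Tuple


class Outcome(Enum):
    DELIVERED = "delivered"
    OUT_OF_STOCK = "out_of_stock"
    MISDELIVERY = "misdelivery"


def resolve_delivery(requested: str,
                     found_on_table: bool,
                     verified_label: Optional[str]) -> Tuple[Outcome, bool]:
    """Return the outcome and whether the user is prompted to save a case.

    found_on_table: whether object detection found the requested item.
    verified_label: what the verification step saw in the YuMi's gripper,
                    or None if nothing was picked up.
    """
    if not found_on_table:
        # First use case: a case asks someone to restock the table.
        return Outcome.OUT_OF_STOCK, True
    if verified_label != requested:
        # Second use case: wrong item or no item, dropped in the
        # misdelivery area and a case is prompted.
        return Outcome.MISDELIVERY, True
    return Outcome.DELIVERED, False


if __name__ == "__main__":
    print(resolve_delivery("globe", True, "basketball"))
```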

Big Objects and reporting

Our dashboard of more than 1,000 deliveries over the week of Dreamforce

In our first version of Robotics Ridge, we were unable to report because platform events do not persist. To overcome this, we created an object to hold information for each delivery. Then, because we knew we would be storing a lot of information, we leveraged Process Builder to archive delivery data and event data into Big Objects.

Process Builder for event and delivery archival

Since we were able to report, we could look at the data and tell how well we were performing. Once we had set up for Dreamforce, we ran a few deliveries to make sure everything was running as planned.

Our dashboard from the day before Dreamforce.

With our report, we were able to see that our average pickup confidence was 87.5% and that we had a much harder time identifying soccer balls than the other types of payloads. We decided to take over 1000 more photos, tag them, and then retrain our model just before the Dreamforce floor opened to the public. With our newly trained model, we were able to increase our pickup confidence to 96.3% and deliver a roughly equal number of each payload.
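The kind of breakdown that pointed us at soccer balls is an average confidence per payload type. In the demo this came from Salesforce reports, so the Python below is only a sketch of the same arithmetic. The record fields (`payload`, `confidence`) and the sample rows are invented for illustration and are not our Dreamforce data.

```python
from collections import defaultdict
from statistics import mean

# Invented sample rows; field names are assumptions.
SAMPLE_DELIVERIES = [
    {"payload": "soccer_ball", "confidence": 0.75},
    {"payload": "soccer_ball", "confidence": 0.85},
    {"payload": "basketball", "confidence": 0.95},
    {"payload": "globe", "confidence": 0.9},
]


def average_confidence_by_payload(deliveries):
    """Group delivery records by payload and average their confidence."""
    grouped = defaultdict(list)
    for delivery in deliveries:
        grouped[delivery["payload"]].append(delivery["confidence"])
    return {payload: mean(values) for payload, values in grouped.items()}


def weakest_payload(deliveries):
    """Return the payload type with the lowest average confidence."""
    averages = average_confidence_by_payload(deliveries)
    return min(averages, key=averages.get)


if __name__ == "__main__":
    print(average_confidence_by_payload(SAMPLE_DELIVERIES))
    print(weakest_payload(SAMPLE_DELIVERIES))
```

A payload that stands out at the bottom of this list is a good candidate for more tagged training photos.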

Challenges

Robotics Ridge was a lot of fun, but of course we faced some challenges along the way. Our biggest challenge this time around was that we had to work on an existing code base. When we started development, our code was scattered across many locations and our Salesforce development wasn’t in Salesforce DX yet. To solve these issues, we kept track of all of our repositories in Quip. We also migrated our Salesforce instance to Salesforce DX. To make our migration successful, we had to write a few custom scripts. These allowed us to deploy all of our code and configuration in the right order into any new scratch org.
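Deploying "in the right order" is a dependency ordering problem. The sketch below shows one way a script could compute such an order with Python's standard `graphlib` module (Python 3.9+). It is not our actual script: the step names and their dependencies are hypothetical, and the deployment commands themselves are left out.

```python
from graphlib import TopologicalSorter

# Hypothetical deployment steps, each mapped to the steps it depends on.
DEPENDS_ON = {
    "custom_objects": set(),
    "platform_events": set(),
    "apex_classes": {"custom_objects", "platform_events"},
    "process_builder_flows": {"apex_classes"},
    "sample_data": {"custom_objects"},
}


def deployment_order(depends_on):
    """Return the steps ordered so every step follows its dependencies."""
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    for step in deployment_order(DEPENDS_ON):
        # A real script would run the matching deploy command here.
        print("deploy", step)
```

`TopologicalSorter` raises `graphlib.CycleError` if two steps depend on each other, which is a useful early warning before anything is pushed to a scratch org.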

As with any project, we also had to balance time with feature requests. We would have loved to have more time to add a “Dance Dance 4th Industrial Revolution” event that would make the robots dance and a disco ball that could rise up from the back of the stage. Alas, time was not on our side so we were unable to make that magic come to life.

Final thoughts

Salesforce brings together all of the important things in your business: customers, partners, employees and, in the future, your robots. With Salesforce, it was easy to integrate Platform Events, Einstein, Service Cloud and Analytics to show off an end-to-end pipeline. Our robotic order fulfillment system is a great example of how you can start to leverage the many parts of the Salesforce Lightning Platform and power the future.

How can I get started?

If you want to learn how to bring the full power of the Salesforce Lightning Platform to your next project, you can learn everything you need to know on Trailhead. Get started with this Robotics Ridge trailmix and learn about all of the different features we used to power this amazing demo.

Heather Dykstra

Developer Evangelist, Salesforce

@SlytherinChika