Create a Salesforce B2C Commerce Intelligence Data Stream

Start the flow of data by creating a data stream for your Salesforce B2C Commerce Intelligence data source. The data stream creates a data lake object (DLO) in Data 360. To use data across Data 360, map the DLO to the Customer 360 data model.

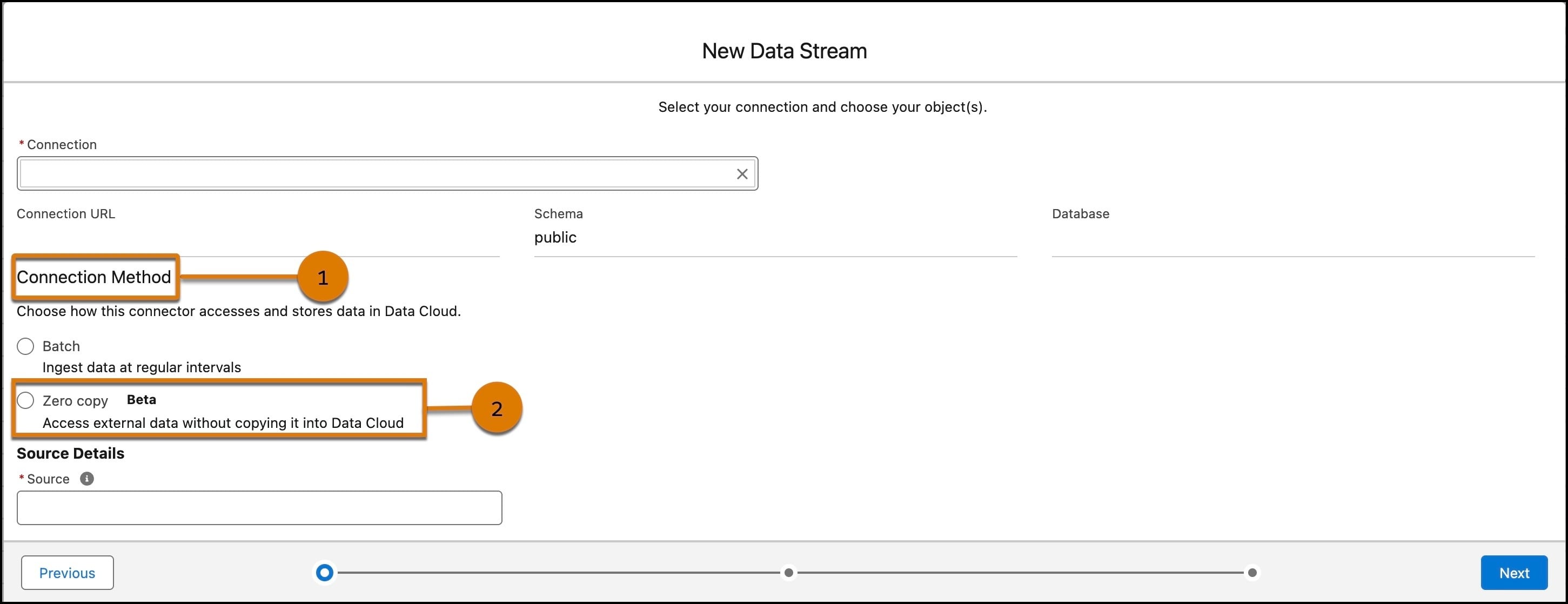

This connector supports two connection methods: batch ingest and zero copy (Zero Copy Data Federation). Both are beta features.

| User Permissions Needed | |
|---|---|
| To create a connection: | Data Cloud Architect permission set |

Before you begin:

- Set up the required Salesforce B2C Commerce Intelligence connection.

1. In Data Cloud, on the Data Streams tab, click New.

   You can also use App Launcher to find and select Data Streams.

2. Under Other Sources, select the Salesforce B2C Commerce Intelligence connection source, and click Next.

3. Select one of the available Salesforce B2C Commerce Intelligence connections.

4. Select the connection method (1): batch or zero copy (2).

If you select batch, follow these steps.

1. Select the object that you want to import, and click Next.

   You can select one new object on the Available Objects tab.

2. Under Object Details, for Category, identify the type of data in the data stream.

   If you select Engagement, you must specify a value for Event Time Field, which describes when the engagement occurred.

3. For Primary Key, select a field that uniquely identifies each source record. For information about fields, see B2C Commerce Data Lakehouse Schema Reference.

   If you need a composite key, or if no suitable key field exists, create one using a formula field. See Create a Formula Field or Use Case 2: Create a Primary Key.

4. (Optional) Select a record modified field.

   If data arrives out of order, the record modified field provides a reference point for deciding whether to update a record. The version with the most recent timestamp is loaded.

5. (Optional) To identify the data lineage of a record's business unit, add the Organization Unit Identifier.

6. Click Next.

7. For Data Space, if the default data space isn't selected, assign the data stream to the appropriate data space.

8. Select the refresh mode.

   | Refresh Mode | Description |
   |---|---|
   | Full Refresh | All existing data is deleted and replaced daily with the current data. Enter the data stream details. |
   | Incremental | Only new data is added to the dataset. Your table must have a primary key and a datetime column that records when each row was last modified. For Record Modified Date-time, select the field that tags changed records. Optionally, identify the column that flags soft deletes. |

9. Under Schedule, select a Frequency.

10. Click Deploy.
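The batch options work together: the primary key (or a composite key built with a formula field) identifies a record, the record modified field decides which version wins when data arrives out of order, and the refresh mode decides whether existing data is replaced or merged. The following Python sketch models those rules on in-memory dictionaries. It's a conceptual illustration only, not how Data 360 implements ingestion; the field names (`order_no`, `line_no`, `last_modified`, `is_deleted`) and all function names are hypothetical.

```python
from datetime import datetime
from typing import Dict, Iterable, List, Optional

# Hypothetical record shape: a plain dict of field name -> value.
Record = Dict[str, object]


def make_composite_key(record: Record, key_fields: List[str], sep: str = "|") -> str:
    """Join several field values into one key string (the idea behind a formula-field key)."""
    return sep.join(str(record[field]) for field in key_fields)


def apply_incremental(
    target: Dict[str, Record],
    batch: Iterable[Record],
    key_fields: List[str],
    modified_field: str,
) -> None:
    """Upsert a batch into target in place, keeping the newest version of each key."""
    for record in batch:
        key = make_composite_key(record, key_fields)
        current = target.get(key)
        # Out-of-order or duplicate delivery: ignore versions that aren't newer.
        if current is not None and current[modified_field] >= record[modified_field]:
            continue
        # Soft-deleted rows are stored too, so a late, older copy can't restore them.
        target[key] = dict(record)


def apply_full_refresh(
    batch: Iterable[Record], key_fields: List[str], modified_field: str
) -> Dict[str, Record]:
    """Discard all existing data and rebuild the table from the current batch only."""
    fresh: Dict[str, Record] = {}
    apply_incremental(fresh, batch, key_fields, modified_field)
    return fresh


def live_rows(target: Dict[str, Record], deleted_field: Optional[str] = None) -> List[Record]:
    """Return rows that aren't flagged as soft-deleted."""
    return [r for r in target.values() if not (deleted_field and r.get(deleted_field))]


# Usage with hypothetical order-line records keyed on (order_no, line_no).
KEY = ["order_no", "line_no"]
table: Dict[str, Record] = {}
apply_incremental(table, [
    {"order_no": "A1", "line_no": 1, "qty": 2, "last_modified": datetime(2024, 1, 2), "is_deleted": False},
    # Older version of A1/1 arriving late; ignored because Jan 2 is newer.
    {"order_no": "A1", "line_no": 1, "qty": 1, "last_modified": datetime(2024, 1, 1), "is_deleted": False},
    {"order_no": "A1", "line_no": 2, "qty": 5, "last_modified": datetime(2024, 1, 1), "is_deleted": False},
], KEY, "last_modified")
# A later batch soft-deletes A1/2.
apply_incremental(table, [
    {"order_no": "A1", "line_no": 2, "qty": 5, "last_modified": datetime(2024, 1, 3), "is_deleted": True},
], KEY, "last_modified")
print([(r["order_no"], r["line_no"], r["qty"]) for r in live_rows(table, "is_deleted")])
# prints [('A1', 1, 2)]

# Full refresh: only the rows in the current batch survive.
snapshot = apply_full_refresh([
    {"order_no": "B7", "line_no": 1, "qty": 3, "last_modified": datetime(2024, 1, 4), "is_deleted": False},
], KEY, "last_modified")
print(sorted(snapshot))
# prints ['B7|1']
```

Keeping soft-deleted rows as flagged entries, rather than dropping them, means an older copy of a deleted record that arrives late can't bring it back.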

If you select zero copy, follow these steps.

1. Select the objects you want to connect.

2. Confirm the Source details, then click Next.

3. For each object, complete the required fields.

   - Add a label and API name for the data lake object.
   - Select a category: Profile, Engagement, or Other.
   - Select a primary key.
   - (Optional) Select an Organization Unit Identifier from the dropdown.
   - (Optional) Select the Supported Fields to connect.

4. Click Next.

5. Select a data space from the dropdown.

6. Enable or disable acceleration. Acceleration uses caching to improve performance by temporarily storing data in a data lake object.

   - To disable acceleration, clear the checkbox.
   - To enable acceleration, select a frequency from the dropdown and, if needed, enter a time. Optionally, choose to refresh the initial file immediately.

7. Click Deploy.
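To make the acceleration trade-off concrete, this Python sketch compares reading a zero copy object with and without a cache. It's a simplified, hypothetical model: `FederatedReader`, `query_source`, and `refresh_seconds` are illustrative names, and the cache refreshes lazily when a read finds it due, whereas Data 360 refreshes on the frequency and time you configure.

```python
import time
from typing import Callable, List, Optional


class FederatedReader:
    """Hypothetical model of reading a zero copy object, with optional acceleration."""

    def __init__(
        self,
        query_source: Callable[[], List[dict]],
        refresh_seconds: Optional[float] = None,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._query_source = query_source
        # None models acceleration disabled: every read queries the source.
        self._refresh_seconds = refresh_seconds
        self._clock = clock
        self._cache: Optional[List[dict]] = None
        self._next_refresh = clock()

    def _refresh(self) -> None:
        # Copy source data into the local cache and schedule the next refresh.
        self._cache = self._query_source()
        self._next_refresh = self._clock() + self._refresh_seconds

    def read(self) -> List[dict]:
        if self._refresh_seconds is None:
            return self._query_source()
        # Serve from the cache until the refresh interval has elapsed.
        if self._cache is None or self._clock() >= self._next_refresh:
            self._refresh()
        return self._cache


# Usage with a fake clock and a counter standing in for source queries.
calls = {"n": 0}


def query_source() -> List[dict]:
    calls["n"] += 1
    return [{"query_no": calls["n"]}]


now = [0.0]
accelerated = FederatedReader(query_source, refresh_seconds=3600, clock=lambda: now[0])
accelerated.read()
accelerated.read()
queries_before_refresh = calls["n"]  # 1: the second read is served from the cache
now[0] = 3600.0
accelerated.read()
queries_after_refresh = calls["n"]  # 2: the cache was due for a refresh

live = FederatedReader(query_source)  # acceleration disabled
live.read()
live.read()
total_queries = calls["n"]  # 4: each uncached read goes to the source
```

The cached reader trades freshness for fewer source queries: between refreshes it returns the stored copy, while the uncached reader always sees current source data at the cost of a query per read.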

For both connection methods, you can confirm that your data stream was created successfully by reviewing the Last Run Status on the Data Stream record page. When the Last Run Status shows Success, you can see how many records were processed and the total number of records loaded.

You can now map the created DLO to the data model to use the data in segments, calculated insights, and other use cases.