Set Up an Azure Blob Storage Connection

Set up an Azure Blob Storage connection to import objects from Azure Storage into Data 360.

| User Permissions Needed | |
|---|---|
| To create an Azure Blob Storage connection: | System Admin profile or Data Cloud Architect permission set |

Complete the prerequisites.

- Get an Azure Storage account with these key settings.
  - Public Access Level: Private
  - Provision at least one account key.
- Set up a container SAS (Shared Access Signature) token. For more information, see Create an Azure Container SAS Token.

If you've restricted access to your storage service, review the Data 360 IP Allowlist to make sure the Azure Blob Storage connection can access your storage service.
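The SAS token you set up as a prerequisite has a fixed expiry time, and Azure rejects requests made with an expired token, so you must regenerate it before it expires. Azure stores the expiry in the token's `se` (signed expiry) query parameter as an ISO 8601 UTC value. As a minimal sketch, the following Python reads `se` and flags a token that expires soon. The function names `sas_expiry` and `needs_regeneration`, the seven-day warning margin, and the sample token are hypothetical choices for illustration; they aren't part of Data 360 or the Azure SDK.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs


def sas_expiry(sas_token: str) -> datetime:
    """Return the UTC expiry time stored in a SAS token's `se` parameter."""
    # Tokens copied from the Azure portal may or may not start with "?".
    params = parse_qs(sas_token.lstrip("?"))
    if "se" not in params:
        raise ValueError("SAS token has no 'se' (signed expiry) parameter")
    # datetime.fromisoformat() only accepts a trailing "Z" from Python 3.11 on.
    raw = params["se"][0].replace("Z", "+00:00")
    expiry = datetime.fromisoformat(raw)
    if expiry.tzinfo is None:
        # Date-only values such as "2025-06-30" carry no offset; SAS times are UTC.
        expiry = expiry.replace(tzinfo=timezone.utc)
    return expiry


def needs_regeneration(
    sas_token: str,
    now: datetime | None = None,
    margin: timedelta = timedelta(days=7),  # hypothetical warning window
) -> bool:
    """Return True if the token expires within `margin` of `now` (default: current UTC time)."""
    if now is None:
        now = datetime.now(timezone.utc)
    return sas_expiry(sas_token) - now <= margin


if __name__ == "__main__":
    # Made-up container SAS token; the signature is a placeholder, not a real value.
    token = "sp=rl&st=2025-01-01T00:00:00Z&se=2025-06-30T00:00:00Z&spr=https&sv=2022-11-02&sr=c&sig=placeholder"
    print(sas_expiry(token))
    print(needs_regeneration(token))
```

With the sample token, `sas_expiry` prints `2025-06-30 00:00:00+00:00`. Running a check like this on a schedule gives you time to regenerate the token before the connection loses access; pick a margin that fits how often you rotate tokens.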

- In Data Cloud, go to Data Cloud Setup.
- Under External Configuration, select Other Connectors.
- Click New.
- Under Source, select Microsoft Azure Blob Storage and click Next.

-

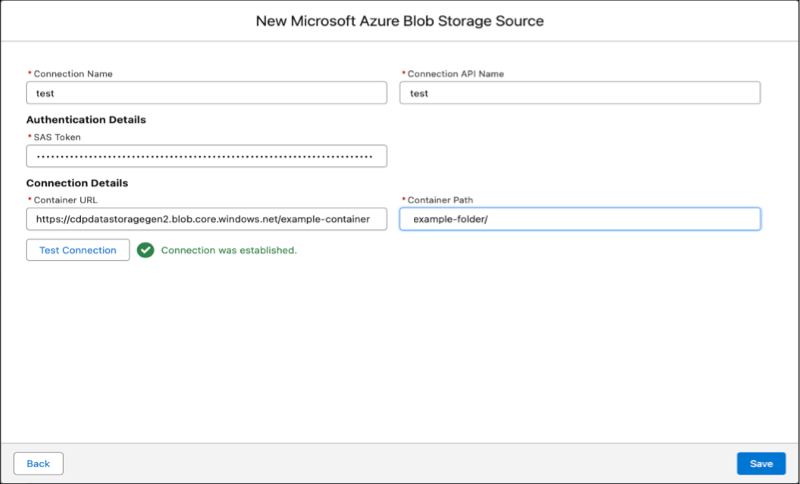

Enter a connection name, connection API name, and the authentication details.

- SAS token: SAS token to grant secure, delegated access to resources in your Azure storage account. Refer to Create an Azure Container SAS Token. You must regenerate the SAS token before its expiration or when there's a change in the Container URL or Container Path.

- Container URL: The URL to access the Azure storage container. You can get the Container URL from your container properties in your storage account. The sample format of a container URL is

https://<your azure storage account name>.blob.core.windows.net/<your azure storage container name>. - Container Path: The folder in the container from where you want to ingest the data. Container path must end with

/. Do not use a leading/. You must select a folder in the container. You can’t connect directly to the root of the container.

All folders under the container are migrated to a staging environment where the objects can then be selected for import into Data 360. Data access charges can apply, so it’s recommended that the parent directory and all of its subfolders only contain essential files.

- To verify your configuration, click Test Connection.
- Click Save.
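Before you click Test Connection, you can catch simple formatting mistakes in the Container URL and Container Path values locally. The following Python sketch checks them against the rules in this article: a URL of the form `https://<account>.blob.core.windows.net/<container>`, and a path that names a folder, ends with `/`, and doesn't start with `/`. The account-name and container-name patterns are an assumption based on Azure's published naming rules (account names are 3 to 24 lowercase letters or digits; container names are 3 to 63 lowercase letters, digits, or single hyphens, starting and ending with a letter or digit). The function names are hypothetical, and passing these checks doesn't confirm that Data 360 can reach the container.

```python
from __future__ import annotations

import re
from urllib.parse import urlparse

# Assumption: these patterns follow Azure's published naming rules for
# storage accounts and blob containers.
ACCOUNT_RE = re.compile(r"^[a-z0-9]{3,24}$")
CONTAINER_RE = re.compile(r"^(?=.{3,63}$)[a-z0-9]+(?:-[a-z0-9]+)*$")
BLOB_SUFFIX = ".blob.core.windows.net"


def check_container_url(url: str) -> list[str]:
    """Return problems found in a container URL; an empty list means none were found."""
    problems = []
    parsed = urlparse(url)
    if parsed.scheme != "https":
        problems.append("URL should use https")
    host = parsed.hostname or ""
    if not host.endswith(BLOB_SUFFIX):
        problems.append(f"host should end with {BLOB_SUFFIX}")
    elif not ACCOUNT_RE.match(host[: -len(BLOB_SUFFIX)]):
        problems.append("storage account name looks invalid")
    # The URL path should hold only the container name; folders go in Container Path.
    segments = [s for s in parsed.path.split("/") if s]
    if len(segments) != 1:
        problems.append("URL path should contain only the container name")
    elif not CONTAINER_RE.match(segments[0]):
        problems.append("container name looks invalid")
    return problems


def check_container_path(path: str) -> list[str]:
    """Return problems found in a Container Path, using the rules in this article."""
    problems = []
    if path in ("", "/"):
        problems.append("path must name a folder, not the container root")
    if path.startswith("/"):
        problems.append("path must not start with '/'")
    if not path.endswith("/"):
        problems.append("path must end with '/'")
    return problems


if __name__ == "__main__":
    print(check_container_url("https://mystorageacct.blob.core.windows.net/sales-data"))
    print(check_container_path("/exports/daily"))
```

In the usage lines, the first call returns an empty list, and the second reports both the leading slash and the missing trailing slash. The URL check only accepts `blob.core.windows.net` hosts, so adjust `BLOB_SUFFIX` if your storage account uses a different endpoint, such as a sovereign-cloud one.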

Data 360 syncs data between your Azure instance and the Data 360 staging environment every 15 minutes. The sync is designed to mirror the structure of your Azure Storage container, so objects deleted from the container are also deleted from the staging environment. Your Data Aware Specialist can then create data streams for any objects under the parent directory. For more information, see Create an Azure Storage Data Stream.